Highly available Pi-hole setup in Kubernetes with secure DNS over HTTPS (DoH)

Welcome! I've been a fan of Pi-hole for some time now. Not only is it an incredibly useful project with great community support, it was also my first homelab project. An undisclosed amount of time and money later, I'm still going strong with many projects and a rack full of hardware. For those in the know, having a homelab looks a lot like a side gig as an IT consultant / Platform Engineer in my own home 😀. To add insult to injury, I don't get paid for this; it costs me money. But I digress.

Previously, I wrote an article about how to set up two or more Pi-hole instances using Docker Swarm, which has worked quite well for me up to this point. I've since decided this setup was too easy and wanted to move from Normal to Hard mode and bring Kubernetes into the mix. Not only that, but I wanted true high availability. It was hard at first, but once you cross that initial learning threshold and bring in some tools to help, you'll be glad you did. I'll assume you are new to Kubernetes, or at least new to running it on your own metal (like I was), so we'll start there.

Kubernetes with K3s

Install k3s w/ etcd to support high-availability

I've found this a dead simple, effective, and powerful way to start at home. K3s is an open-source, well-maintained, well-documented, compliant distribution of Kubernetes that is lightweight and designed to run on a wide range of hardware and environments, including your old laptop or an ARM-based Raspberry Pi 4. I'm using a mix of Proxmox VMs running on some Dell hardware and four Raspberry Pi 4s booting from SSDs (not SD cards). You can start like I did and use the k3s install script.

Prerequisites

- At least three physical computers (recommended) or virtual machines with dedicated IP addresses. The more physical separation, the more resilient your setup will be. If you use Raspberry Pis for your k3s server nodes, you'll want USB SSDs or NVMe drives; SD cards are too slow for the embedded etcd database.

- Some familiarity with Linux, ideally Ubuntu, and comfortable running commands in your favorite terminal.

- A text file, notes app, or something to keep track of commands, IP addresses, and configuration.

Let's install k3s

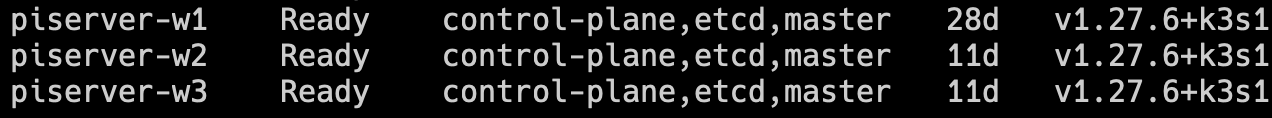
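
For reference, here is a minimal sketch of an embedded-etcd install using the k3s install script. The two IP addresses, the --tls-san value, and the choice to disable the bundled ServiceLB (so it does not compete with kube-vip later) are assumptions for illustration and may differ from what the screenshot shows.

```shell
# Assumed addresses; replace them with your own.
SERVER1_IP="192.168.1.11"   # hypothetical address of server-1
VIP="192.168.1.100"         # virtual IP that kube-vip will announce later

# Run on server-1 only: --cluster-init starts a new cluster on embedded etcd.
# --tls-san adds the VIP to the API server certificate.
# --disable servicelb is an assumption: it leaves LoadBalancer Services to kube-vip.
install_first_server() {
  curl -sfL https://get.k3s.io | sh -s - server \
    --cluster-init \
    --tls-san "$VIP" \
    --disable servicelb
}

# Run on server-2 and server-3, with K3S_TOKEN set to the contents of
# /var/lib/rancher/k3s/server/node-token from server-1.
install_additional_server() {
  curl -sfL https://get.k3s.io \
    | K3S_TOKEN="${K3S_TOKEN:?set K3S_TOKEN first}" sh -s - server \
        --server "https://${SERVER1_IP}:6443" \
        --tls-san "$VIP" \
        --disable servicelb
}
```

Paste the block into a shell on each node and call the function that matches that node. Nothing runs until you call one of them. Once server-1 is up, its kubeconfig lives at /etc/rancher/k3s/k3s.yaml.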

Check your cluster

Let's make sure your cluster is up and running. There are many ways to do this; primarily, you'll need the kubectl CLI, which is how you'll interface with k3s. Once that works, we can move to some cool visual tools. Install kubectl on your workstation, then copy the Kubernetes config you captured earlier (/etc/rancher/k3s/k3s.yaml on server-1) to your working directory or to the default directory ~/.kube/ with the filename config (no extension).
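
One way to do the copy from your workstation is sketched below. It assumes SSH access to server-1 with passwordless sudo, and the user name and IP address are hypothetical. Note that it overwrites any existing ~/.kube/config.

```shell
SERVER1_IP="192.168.1.11"   # hypothetical address of server-1
SSH_USER="ubuntu"           # hypothetical login on server-1

# k3s writes its kubeconfig with the server set to 127.0.0.1; swap in an
# address that is reachable from your workstation.
rewrite_server() {
  sed "s#https://127.0.0.1:6443#https://$1:6443#"
}

# The file is readable by root only, so read it through sudo over SSH,
# rewrite the server address, and save it as the default kubeconfig.
fetch_kubeconfig() {
  mkdir -p "$HOME/.kube"
  ssh "${SSH_USER}@${SERVER1_IP}" sudo cat /etc/rancher/k3s/k3s.yaml \
    | rewrite_server "$SERVER1_IP" > "$HOME/.kube/config"
  chmod 600 "$HOME/.kube/config"
}
```

Call fetch_kubeconfig once server-1 is installed; kubectl picks up ~/.kube/config automatically.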

Now run kubectl get nodes and you should see an output like this:

High availability and Load balancing with kube-vip

Now that your cluster is resilient, meaning that your control plane can tolerate one of the three servers failing (or being down for maintenance), we can move on to installing and configuring kube-vip. With kube-vip, we can load-balance our services and add high-availability access to the control plane! This works natively in your cluster; no additional VMs or services like HAProxy or Keepalived are required.

Install kube-vip via helm

Create your values file to customize the chart:
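
A minimal sketch of such a values file follows. The key names are taken from the kube-vip chart's default values.yaml as best understood, so compare them against the output of helm show values kube-vip/kube-vip before relying on them. The interface name is an assumption.

```shell
# Write a hypothetical values file for the kube-vip chart.
cat > kube_vip_values.yaml <<'EOF'
config:
  address: "192.168.1.100"    # the VIP used throughout this article
env:
  vip_interface: "eth0"       # assumed NIC name; check yours with `ip addr`
  vip_arp: "true"             # announce the VIP with ARP (layer 2)
  cp_enable: "true"           # float the VIP across the control plane
  lb_enable: "true"           # load-balance API traffic across the servers
  lb_port: "6443"
  svc_enable: "true"          # also handle Services of type LoadBalancer
  vip_leaderelection: "true"  # only one node holds the VIP at a time
EOF

# Register the chart repository so the install command can find the chart.
if command -v helm >/dev/null 2>&1; then
  helm repo add kube-vip https://kube-vip.github.io/helm-charts
  helm repo update
else
  echo "helm not found; install it before continuing" >&2
fi
```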

Install the chart with your configuration on your cluster

helm install my-kube-vip kube-vip/kube-vip -n kube-system -f kube_vip_values.yaml

Once installation is successful, you can modify your local kube config (~/.kube/config) to connect to your VIP, 192.168.1.100.
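
If you'd rather not edit the file by hand, kubectl can make the change. This sketch assumes the cluster entry in your kubeconfig is still named default, which is what k3s generates, and that the VIP is listed in the API server certificate (for example via --tls-san at install time); otherwise kubectl will reject the connection with a certificate error.

```shell
VIP="192.168.1.100"
API_SERVER="https://${VIP}:6443"

# Point the "default" cluster entry at the VIP, then confirm the nodes answer.
if command -v kubectl >/dev/null 2>&1 && [ -f "$HOME/.kube/config" ]; then
  kubectl config set-cluster default --server="$API_SERVER"
  kubectl get nodes
else
  echo "kubectl or ~/.kube/config not found; nothing changed" >&2
fi
```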

If kube-vip does not start, additional server configuration may be required.

Ensure ip_vs modules are loaded:
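
A minimal sketch for loading the IPVS kernel modules now and at every boot. The module list and the /etc/modules-load.d/ipvs.conf file name are assumptions based on a typical Ubuntu setup.

```shell
# IPVS modules commonly needed for IPVS-based load balancing.
MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack"

load_ipvs_modules() {
  # Load each module into the running kernel.
  for m in $MODULES; do
    sudo modprobe "$m"
  done
  # Persist the list so systemd loads the modules on every boot.
  printf '%s\n' $MODULES | sudo tee /etc/modules-load.d/ipvs.conf >/dev/null
}
```

Run load_ipvs_modules on each server node, then check the result with lsmod | grep ip_vs.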

Pi-hole

Deploy Pi-hole w/ DoH support via helm

Okay, the hard part is over. k3s is up and running, and we're now ready to deploy Pi-hole, enterprise style! Thankfully, there is a strong community around Pi-hole, filled with homelabbers like us who have already built and actively support a way to do this easily via Helm. Think of Helm as a package manager for Kubernetes. Helm packages are called Charts, and we will install one for Pi-hole, which you can check out here on GitHub.

First, let's add the chart repository so that we'll be able to deploy it using the helm command:
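
A short sketch of that step, using the repository URL published for the mojo2600 chart:

```shell
PIHOLE_REPO_URL="https://mojo2600.github.io/pihole-kubernetes/"

# Add the repository under the name "mojo2600" and refresh the local index.
if command -v helm >/dev/null 2>&1; then
  helm repo add mojo2600 "$PIHOLE_REPO_URL"
  helm repo update
else
  echo "helm not found; install it before continuing" >&2
fi
```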

Before we install the chart, let's customize our installation. You can do this by setting/overriding the chart's available values. For this chart by mojo2600, you can see some recommended values in the README and the full values file in the chart here.

Start by creating your own values file in your working directory, called pi-hole-values.yaml. I'll break it down by section and then pull it all together at the end.

Configure DNS

We need our Pi-hole DNS service available outside the cluster so that we can hand it out via DHCP and start blocking ads across the network.
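
A minimal sketch of what the DNS section of pi-hole-values.yaml could look like, assuming the serviceDns and serviceDhcp keys from the chart's values file. The address matches the one queried in the deployment checklist in this article; pick a separate free address if you'd rather not share the control-plane VIP.

```shell
# Append a DNS section to pi-hole-values.yaml.
# Run once; re-running would duplicate the keys.
cat >> pi-hole-values.yaml <<'EOF'
serviceDns:
  type: LoadBalancer
  loadBalancerIP: 192.168.1.100   # address queried in the checklist
  mixedService: true              # one Service for both TCP and UDP on port 53
serviceDhcp:
  enabled: false                  # assumption: DHCP stays on your router
EOF
```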

Configure DoH

We want to ensure all of our upstream DNS requests use DNS over HTTPS (DoH) via a Cloudflare tunnel.
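
A minimal sketch of the DoH section, assuming the chart's doh block behaves as its README describes: enabling it runs a cloudflared proxy container alongside Pi-hole and points Pi-hole's upstream DNS at that proxy.

```shell
# Append a DoH section to pi-hole-values.yaml.
# Run once; re-running would duplicate the keys.
cat >> pi-hole-values.yaml <<'EOF'
doh:
  enabled: true        # run the cloudflared DoH proxy next to Pi-hole
  pullPolicy: Always   # assumption: always pull the newest proxy image
EOF
```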

Configure Pi-hole web admin

We want to ensure we can access the web interface using a local IP Address or local DNS. This helps with viewing logs and manually running backups.
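
A minimal sketch of the web admin section, assuming the chart's adminPassword, virtualHost, and serviceWeb keys. The hostname and password are the example values from the deployment checklist in this article; the web address is hypothetical.

```shell
# Append a web admin section to pi-hole-values.yaml.
# Run once; re-running would duplicate the keys.
cat >> pi-hole-values.yaml <<'EOF'
adminPassword: "my!admin3password"          # example from the checklist; change it
virtualHost: pihole-k3s.mylocaldomain.org   # hostname from the checklist
serviceWeb:
  type: LoadBalancer
  loadBalancerIP: 192.168.1.101             # hypothetical free address on your LAN
EOF
```

For the hostname to resolve, your local DNS needs a record that points it at the web address.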

TIP: Consider using a persistent volume storage provider, like NFS or Longhorn, for your Pi-hole volume. I highly recommend it, but I won't cover it in this walkthrough. The good news is that once you decide to add a PV storage provider to your cluster, it will be very easy to move Pi-hole over to it via a helm upgrade. For now, make sure you back up regularly via Teleporter, or use something like Orbital-Sync to synchronize your configuration between this instance and, perhaps, a Pi-hole server running directly on a Raspberry Pi.

Pulling it all together

Here is our complete pi-hole-values.yaml configuration file we'll use when installing the chart:
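
The sketch below writes one possible complete file in a single step. The key names are assumed from the mojo2600 chart's values file, the hostname, password, and DNS address come from the deployment checklist in this article, and the web address is hypothetical. Check the keys against helm show values mojo2600/pihole before installing.

```shell
# Create (or overwrite) the full values file in the working directory.
cat > pi-hole-values.yaml <<'EOF'
# DNS: expose port 53 outside the cluster
serviceDns:
  type: LoadBalancer
  loadBalancerIP: 192.168.1.100   # address queried in the checklist
  mixedService: true              # one Service for both TCP and UDP
serviceDhcp:
  enabled: false                  # assumption: DHCP stays on your router

# DoH: send upstream queries through the cloudflared proxy container
doh:
  enabled: true
  pullPolicy: Always

# Web admin
adminPassword: "my!admin3password"          # example from the checklist; change it
virtualHost: pihole-k3s.mylocaldomain.org   # hostname from the checklist
serviceWeb:
  type: LoadBalancer
  loadBalancerIP: 192.168.1.101             # hypothetical free address on your LAN
EOF
```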

Install Pi-hole chart

I'm using the helm upgrade command with the --install flag, which installs the release if it doesn't exist yet and upgrades it otherwise. The arguments are my release name, my-pihole (choose your own); the repository and chart name, mojo2600/pihole; and finally our custom values file, passed with -f pi-hole-values.yaml.

helm upgrade --install my-pihole mojo2600/pihole -f pi-hole-values.yaml

Successful deployment checklist:

- kubectl get deployments should show my-pihole as ready and available.

- http://pihole-k3s.mylocaldomain.org/admin loads the web interface and accepts my!admin3password to log in.

- dig @192.168.1.100 google.com answers successfully on port 53.

Appendix

Helpful resources on your Pi-hole and Kubernetes journey

Get visual with Lens

An outstanding Kubernetes management interface that is free for personal use. It's saved me quite a few times and helped me learn Kubernetes. I'm sure it will help you as well: Install Lens Desktop - Lens Documentation (k8slens.dev)

Is the internet down?

Homelabs are fun, but stuff happens. Do yourself a favor and set up a separate static install of Pi-hole on a cheap Raspberry Pi, and make this your secondary DNS server. I know we just worked hard to make Pi-hole highly available, but let's face it: you have a day job. So now you have two separate sets of ad lists, whitelists, local DNS records, etc., to manage, which is where orbital-sync comes in. It's dead simple to set up on that same Raspberry Pi via docker-compose. It creates a backup of your "primary" Pi-hole and restores that backup to any number of "secondary" Pi-holes. You'll thank me on this one.
