Load-balanced, highly available Cloudflare tunnels with Docker Swarm

In previous posts, I've shown how easy it is to set up your own secure tunnels directly to Cloudflare on bare metal or within virtual machines. Why stop there? Let’s make them easier to manage and highly available by containerizing multiple tunnels across several physical devices while leveraging Cloudflare to load balance your ingress traffic. If you already run a Kubernetes or Docker Swarm cluster, or plan to (which I recommend), this should help you get started in that direction. There are many reasons to go this route, chiefly portability and flexibility as your homelab evolves over time.

I'm keeping things simple and using Docker Swarm for my home setup. Why not Kubernetes? For starters, it's more complex to get up and running, and for my homelab, the flexibility of compose files suits me. I have a Rancher cluster set up to play around with Kubernetes, but that is for another post. That said, I did introduce some complexity by running two tunnels in a load-balanced configuration, complete with a health check, because what's better than one tunnel?

Before spinning up the compose file, you'll need to authenticate with Cloudflare via the cloudflared CLI, create and register each tunnel, and build your config file, which contains your ingress rules. See my cloudflared tunnel post for how to set that up. Once done, copy the files the compose file references (tunnel credentials, certificate, and config files) somewhere they are locally accessible when you deploy. Given these are one-time steps, I decided not to automate them or bake them into a custom container build, though you could absolutely do that. Perhaps I'll set that up at some point to demonstrate.
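
If you'd rather script those one-time steps, here is a minimal Python sketch. It assumes cloudflared is on your PATH and keeps its files in the default `~/.cloudflared` directory (`cert.pem` from `tunnel login`, plus one `<UUID>.json` credentials file per `tunnel create`); the tunnel names and the `./secrets` staging directory are hypothetical. By default it only prints the commands; pass `--run` to execute them and copy the resulting files.

```python
"""Minimal sketch: script the one-time cloudflared tunnel setup (dry run by default)."""
import shlex
import shutil
import subprocess
import sys
from pathlib import Path

# Hypothetical tunnel names; use whatever names you like.
TUNNEL_NAMES = ["swarm-tunnel-1", "swarm-tunnel-2"]
# Assumption: cloudflared's default directory for cert.pem and credentials files.
CLOUDFLARED_DIR = Path("~/.cloudflared").expanduser()


def setup_commands(names, have_cert=False):
    """Return the CLI calls: `tunnel login` (skipped if cert.pem exists), then one `tunnel create` per name."""
    cmds = [] if have_cert else [["cloudflared", "tunnel", "login"]]
    cmds += [["cloudflared", "tunnel", "create", name] for name in names]
    return cmds


def stage_files(src_dir, dest_dir):
    """Copy cert.pem and every *.json credentials file from src_dir into dest_dir; return the copied names."""
    src, dest = Path(src_dir), Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    copied = []
    # Note: this copies *all* JSON files in src_dir, including credentials for unrelated tunnels.
    for f in sorted(src.glob("*.json")) + sorted(src.glob("cert.pem")):
        shutil.copy2(f, dest / f.name)
        copied.append(f.name)
    return copied


if __name__ == "__main__":
    run = "--run" in sys.argv
    have_cert = (CLOUDFLARED_DIR / "cert.pem").exists()
    for cmd in setup_commands(TUNNEL_NAMES, have_cert=have_cert):
        print("$", shlex.join(cmd))
        if run:
            subprocess.run(cmd, check=True)
    if run:
        print("staged:", stage_files(CLOUDFLARED_DIR, "./secrets"))
```

Because `tunnel login` asks you to authorize in a browser, it stays an interactive step; the script simply skips it when `cert.pem` already exists.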

My docker-compose file should work in Docker Desktop and can also be deployed as a stack in Docker Swarm. It uses Docker Swarm configs to store my ingress rules and Swarm secrets to hold my credentials and certificates. I deploy all of this with GitHub Actions to my Portainer instance whenever a PR is merged into my main branch. Yes, I'm keeping secrets in GitHub, which I plan to remedy down the road with something like HashiCorp Vault. To keep things simple, test it first with `docker-compose up`. Once it's tested and verified, you can deploy it to your swarm cluster.
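
As an illustration, here is a minimal Python sketch of one possible layout for such a stack: two cloudflared services, each mounting its ingress rules from a Swarm config and its tunnel credentials from a Swarm secret. The service, secret, and file names are hypothetical (only swarm-config and swarm2-config come from this post), and it assumes the `cloudflare/cloudflared` image uses the cloudflared binary as its entrypoint. It prints JSON, which YAML parsers accept, so the output can be redirected into docker-compose.yml.

```python
"""Minimal sketch: emit a two-tunnel stack file that uses Swarm configs and secrets."""
import json

# Hypothetical names and paths; swarm-config / swarm2-config match the configs named in the post.
TUNNELS = [
    {"service": "tunnel1", "config": "swarm-config", "config_file": "./swarm-config.yml",
     "secret": "tunnel1-credentials", "secret_file": "./secrets/tunnel1.json"},
    {"service": "tunnel2", "config": "swarm2-config", "config_file": "./swarm2-config.yml",
     "secret": "tunnel2-credentials", "secret_file": "./secrets/tunnel2.json"},
]


def build_stack(tunnels, image="cloudflare/cloudflared:latest"):
    """Return a compose-file dict with one cloudflared service per tunnel."""
    services, configs, secrets = {}, {}, {}
    for t in tunnels:
        services[t["service"]] = {
            "image": image,
            # Assumes the image entrypoint is cloudflared, so this runs `cloudflared ... tunnel --config ... run`.
            "command": ["tunnel", "--config", "/etc/cloudflared/config.yml", "run"],
            # The Swarm config holding this tunnel's ingress rules, mounted at the path above.
            "configs": [{"source": t["config"], "target": "/etc/cloudflared/config.yml"}],
            # Swarm mounts each secret at /run/secrets/<name>; credentials-file in the config must point there.
            "secrets": [t["secret"]],
            "deploy": {"replicas": 1, "restart_policy": {"condition": "any"}},
        }
        configs[t["config"]] = {"file": t["config_file"]}
        secrets[t["secret"]] = {"file": t["secret_file"]}
    return {"version": "3.8", "services": services, "configs": configs, "secrets": secrets}


if __name__ == "__main__":
    # JSON is valid YAML, so this output can be redirected into docker-compose.yml.
    print(json.dumps(build_stack(TUNNELS), indent=2))
```

For example, run `python make_stack.py > docker-compose.yml` (the script name is arbitrary), then `docker-compose up` to test locally. With this layout, each tunnel's config would set `credentials-file` to its secret's mount path, such as /run/secrets/tunnel1-credentials.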

Note: swarm-config and swarm2-config should have identical ingress rules, because the load balancer may route any request to either tunnel.
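
To illustrate, here is a minimal Python sketch that renders both config files from one shared ingress list, so only the tunnel ID and credentials path differ. The hostnames, the `app` backend, the tunnel IDs, and the `/run/secrets/...` credentials paths are hypothetical. The `/check` rule answers with `http_status:200`, which is one way to serve the load balancer's health check straight from cloudflared, and the last rule is the catch-all that cloudflared requires.

```python
"""Minimal sketch: render two cloudflared configs that share one ingress rule list."""
import json

# Hypothetical hostnames and backend; replace with your own.
SHARED_INGRESS = [
    # Health-check route for the load balancer monitor; cloudflared answers 200 itself.
    {"hostname": "lb-hostname.domain.net", "path": "^/check$", "service": "http_status:200"},
    {"hostname": "app.domain.net", "service": "http://app:8080"},
    # cloudflared requires a last rule with no hostname that catches everything else.
    {"service": "http_status:404"},
]


def render_config(tunnel_id, credentials_file, ingress):
    """Return config.yml text; values are JSON-quoted, which YAML reads as ordinary strings."""
    q = json.dumps
    lines = [f"tunnel: {q(tunnel_id)}", f"credentials-file: {q(credentials_file)}", "ingress:"]
    for rule in ingress:
        first, *rest = rule.items()
        lines.append(f"  - {first[0]}: {q(first[1])}")
        lines.extend(f"    {key}: {q(value)}" for key, value in rest)
    return "\n".join(lines) + "\n"


def ingress_section(config_text):
    """Return everything after the `ingress:` line, for comparing two configs."""
    return config_text.split("\ningress:\n", 1)[1]


if __name__ == "__main__":
    one = render_config("TUNNEL-1-UUID", "/run/secrets/tunnel1-credentials", SHARED_INGRESS)
    two = render_config("TUNNEL-2-UUID", "/run/secrets/tunnel2-credentials", SHARED_INGRESS)
    # The whole point: both tunnels expose exactly the same routes.
    assert ingress_section(one) == ingress_section(two)
    print(one)
```

Generating both files from a single list means the two tunnels can't drift apart as you add services.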

Deploy your stack

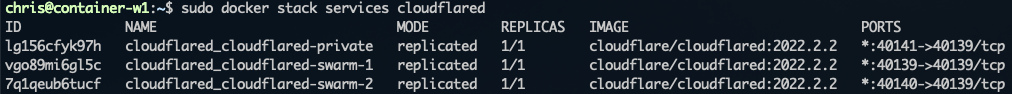

On your manager node, copy over your compose file and every config and secret it references, then run `docker stack deploy --compose-file docker-compose.yml cloudflared`. To verify that both services are running, run `docker stack services cloudflared`. If everything is working at this point, I highly recommend removing those local files and setting up an automated deployment, or using something like Portainer that can pull and deploy your stack directly from GitHub.
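
The deploy-and-verify step can also be scripted. Below is a minimal Python sketch, meant to run on a manager node with the docker CLI available. It shells out to the two commands above, uses `docker stack services --format` to read each service's replica count, and polls until every service reports all replicas running. The timeout and polling interval are arbitrary choices, and nothing runs unless you pass `--deploy`.

```python
"""Minimal sketch: deploy the stack, then wait until every service reports full replicas."""
import subprocess
import sys
import time

STACK = "cloudflared"


def parse_services(output):
    """Parse `docker stack services --format '{{.Name}} {{.Replicas}}'` output into {name: (running, desired)}."""
    status = {}
    for line in output.splitlines():
        parts = line.split()
        if len(parts) < 2:
            continue
        # Replicas looks like "1/1"; newer Docker may append text such as "(max 1 per node)".
        running, desired = parts[1].split("/")
        status[parts[0]] = (int(running), int(desired))
    return status


def all_running(status):
    """True when at least one service exists and each has running == desired replicas."""
    return bool(status) and all(r == d for r, d in status.values())


def stack_status(stack=STACK):
    out = subprocess.run(
        ["docker", "stack", "services", stack, "--format", "{{.Name}} {{.Replicas}}"],
        check=True, capture_output=True, text=True,
    ).stdout
    return parse_services(out)


def deploy_and_wait(compose_file="docker-compose.yml", stack=STACK, timeout=120, interval=5):
    subprocess.run(["docker", "stack", "deploy", "--compose-file", compose_file, stack], check=True)
    deadline = time.monotonic() + timeout
    status = {}
    while time.monotonic() < deadline:
        status = stack_status(stack)
        if all_running(status):
            return status
        time.sleep(interval)
    raise TimeoutError(f"stack {stack!r} not fully running after {timeout}s: {status}")


if __name__ == "__main__" and "--deploy" in sys.argv:
    print(deploy_and_wait())
```

Polling matters because replicas typically show `0/1` for a while after deploying, while nodes pull the image.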

Cloudflare LB Setup

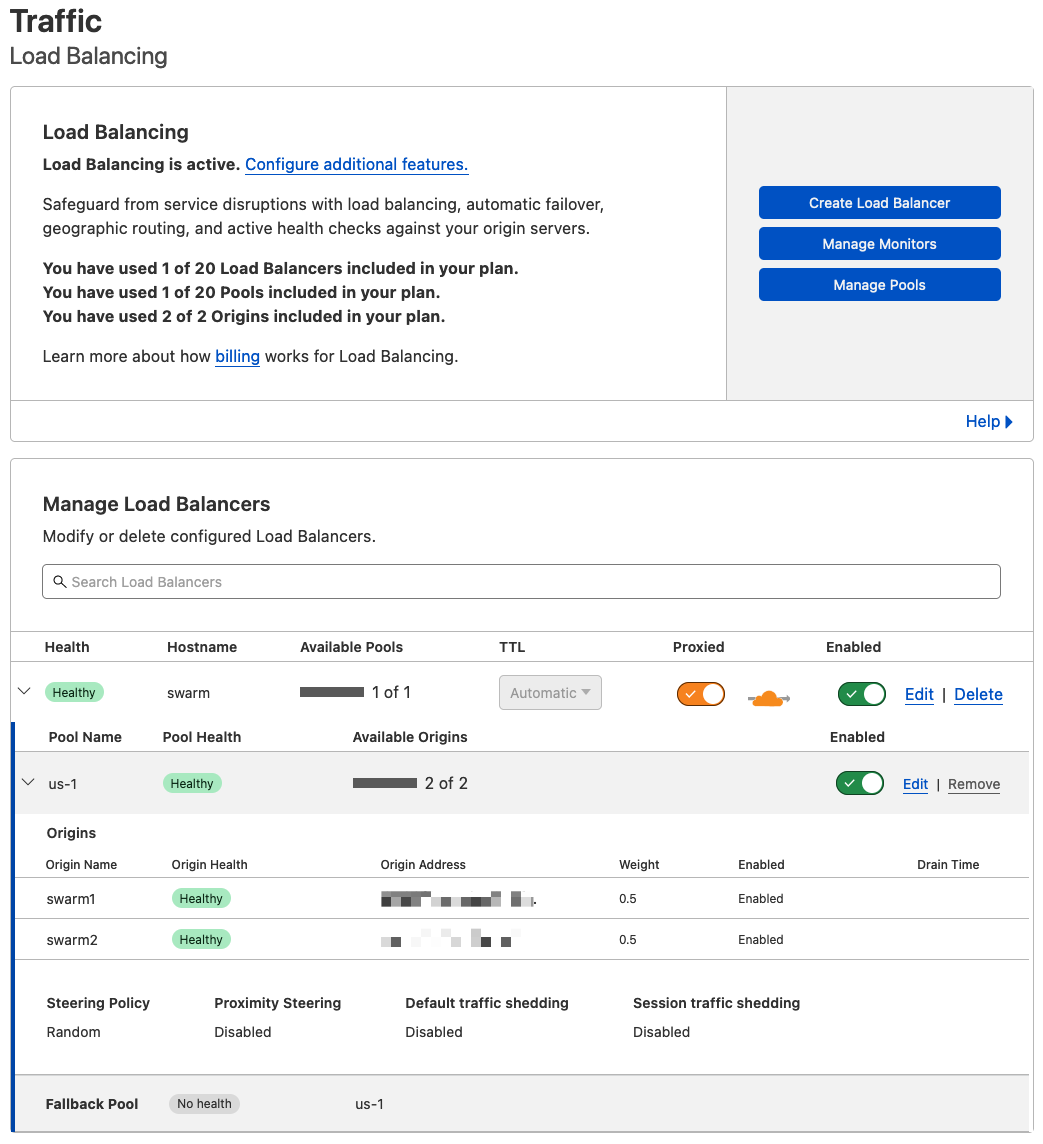

Traffic -> Load Balancing -> Create Load Balancer (paid feature)

Each tunnel you created in the first step was assigned an origin address of the form `<UUID>.cfargotunnel.com`, which you'll use here for the two origins in your load balancer. I've set each origin's weight to 50%, splitting requests roughly evenly between the two tunnels. The hostname of your load balancer becomes the endpoint that other CNAMEs can point to as you add ingress rules for local services you want to host or expose. To ensure your LB pools show as healthy, point the health check at the endpoint defined in your ingress rules, which should look like https://lb-hostname.domain.net/check. I prefer this setup to hosting my own Traefik proxy (or similar), since I don't need to open any ports on my firewall.
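
Origin weights describe the share of traffic each origin should receive rather than a strict alternation. As a toy model (not Cloudflare's implementation), this Python sketch picks among healthy origins at random in proportion to their weights: with two 50% origins the split converges to roughly half each, and if one tunnel fails its health check, all traffic goes to the other. The `tunnel-N-uuid.cfargotunnel.com` addresses are placeholders.

```python
"""Toy model: weighted origin selection restricted to healthy origins (not Cloudflare's code)."""
import random
from collections import Counter

# Placeholder tunnel origin addresses with the 50/50 weights from the post.
ORIGINS = {
    "tunnel-1-uuid.cfargotunnel.com": 0.5,
    "tunnel-2-uuid.cfargotunnel.com": 0.5,
}


def pick_origin(origins, healthy, rng):
    """Choose one healthy origin with probability proportional to its weight."""
    candidates = [o for o in origins if o in healthy]
    if not candidates:
        raise RuntimeError("no healthy origins in the pool")
    return rng.choices(candidates, weights=[origins[o] for o in candidates], k=1)[0]


def simulate(origins, healthy, requests, seed=0):
    """Count how many of `requests` simulated requests land on each origin."""
    rng = random.Random(seed)
    return Counter(pick_origin(origins, healthy, rng) for _ in range(requests))


if __name__ == "__main__":
    print(simulate(ORIGINS, set(ORIGINS), 10_000))
    # Tunnel 2 fails its health check: every request should go to tunnel 1.
    print(simulate(ORIGINS, {"tunnel-1-uuid.cfargotunnel.com"}, 1_000))
```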

That's it, you're load balanced! For high availability, you'll need at least two devices running 24/7, with the tunnels running on different devices: for example, two Raspberry Pis running Docker Engine with swarm mode enabled.
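
Two devices only help if the tunnels actually run on different ones. Swarm's scheduler tends to spread tasks across nodes, but for a guarantee you can pin each service with a placement constraint such as `node.hostname == pi-1` (a hypothetical hostname) under `deploy.placement.constraints`. This minimal Python sketch checks the result from a manager node: it reads `docker stack ps` output and reports whether the stack's running tasks landed on at least two nodes. The stack name and node hostnames are assumptions.

```python
"""Minimal sketch: check that a stack's running tasks are spread across at least two nodes."""
import subprocess


def parse_tasks(output):
    """Parse `docker stack ps --format '{{.Name}} {{.Node}}'` lines into {service: {nodes}}."""
    tasks = {}
    for line in output.splitlines():
        parts = line.split()
        if len(parts) < 2:
            continue
        # Task names look like "<stack>_<service>.<slot>", e.g. cloudflared_tunnel1.1.
        service = parts[0].rsplit(".", 1)[0]
        tasks.setdefault(service, set()).add(parts[1])
    return tasks


def is_spread(tasks, minimum=2):
    """True when the tasks, taken together, run on at least `minimum` distinct nodes."""
    nodes = set().union(*tasks.values())
    return len(nodes) >= minimum


def running_tasks(stack="cloudflared"):
    out = subprocess.run(
        ["docker", "stack", "ps", stack, "--filter", "desired-state=running",
         "--format", "{{.Name}} {{.Node}}"],
        check=True, capture_output=True, text=True,
    ).stdout
    return parse_tasks(out)
```

On a manager node, `is_spread(running_tasks())` should return True once both devices have joined the swarm and each is running one tunnel.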