Run a free AI coding assistant locally with VS Code

Wouldn't it be awesome to use a local LLM as your personal AI assistant on the daily, subscription-free, completely offline, and even in VS Code? Type "y" to continue.

Like many of you, I've been playing with, using, and frankly relying on services like ChatGPT and Copilot for some time now. Though it's only been about a year since these services became generally available, it's hard to imagine living and working without them. I realize that for those not in tech, this may not be the case yet, but it's only a matter of time. These services aren't exactly cheap: $20/month for ChatGPT Plus ($30/seat for Team), $10-$40/month for GitHub Copilot, $20-$30 for Office 365 Copilot, $20/month for Gemini, Claude, Grok, and the list goes on. I say this fully knowing that it's worth it. I wouldn't suggest paying for all of them, but I've been a day-one subscriber to ChatGPT Plus and GitHub Copilot, and I plan to continue.

So paid online AI assistants are awesome, but they're not all the awesome. That is where Hugging Face comes in. Think of it as the GitHub and, to some extent, the app store of open-source AI. You can browse, download, and even use various open-source LLMs on the site. They even have a ChatGPT-style app called HuggingChat that lets you first select the model you want to use and then interact with it as you would any other multi-modal AI assistant. They also have their own version of "custom GPTs" called Assistants. Now, Hugging Face is most certainly geared toward developers, which brings me back to the topic of this post. Wouldn't it be awesome to use these models on the daily, subscription-free, completely offline, and even in VS Code? Type "y" to continue.

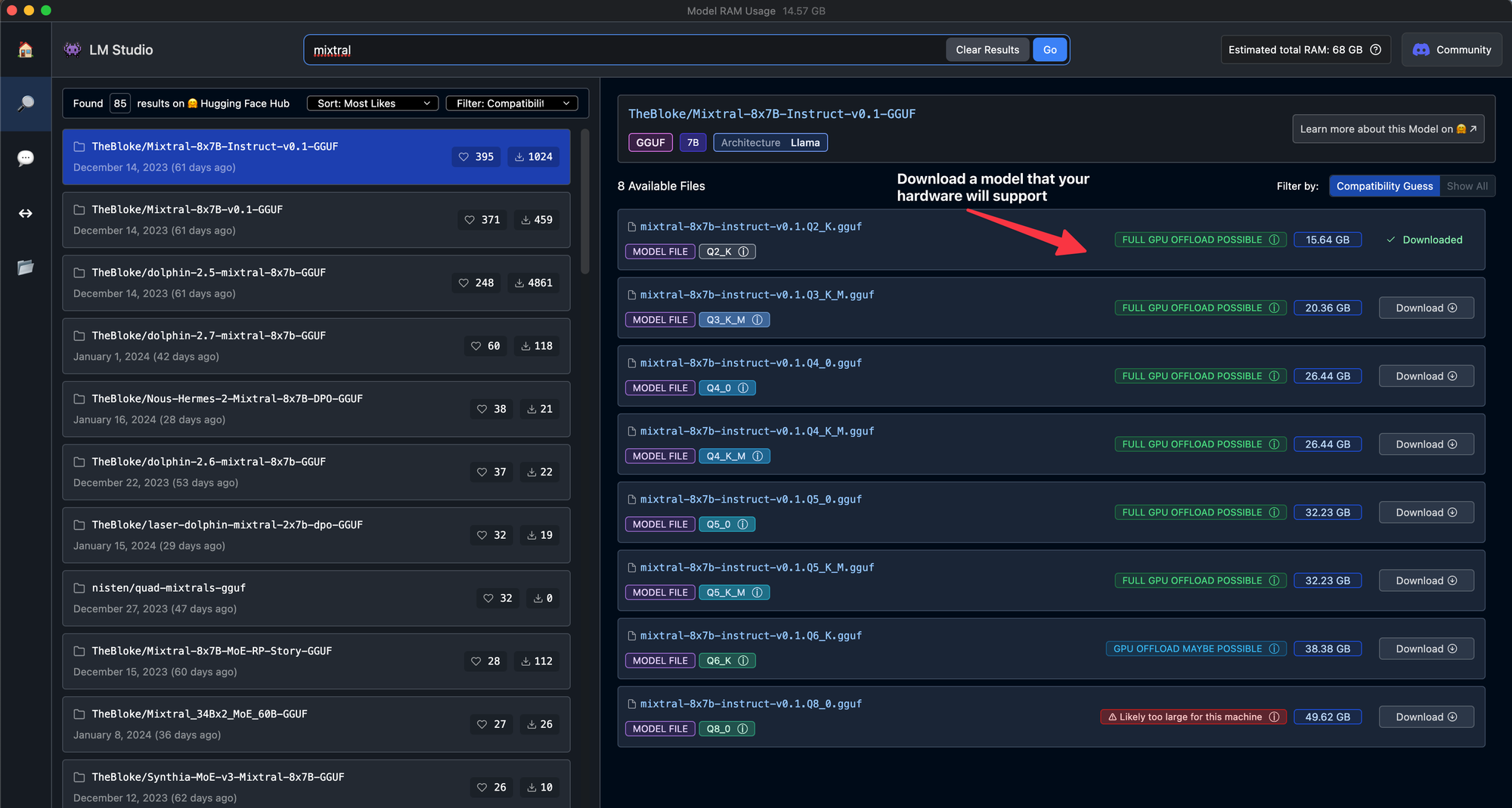

Install & Configure LM Studio

LM Studio is a rapidly improving app for Windows, Mac (Apple Silicon), and Linux (beta) that allows you to download compatible models from Hugging Face and run them locally. There are a couple of ways to use it: 1) You can load a downloaded LLM and interact with it like any other chat-based assistant. Several built-in system prompts and options let you customize the performance and output format, use experimental features, and more. 2) This is the part we'll focus on: you can load that same model, and LM Studio will wrap a local API server around it that mimics the OpenAI API. That is very cool, given that many SDKs and, importantly, editor and IDE extensions already support the OpenAI API.
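To make the "mimics the OpenAI API" part concrete, here is a minimal sketch of talking to the local server from Python using only the standard library. It assumes the server is listening at `http://localhost:1234/v1` and accepts OpenAI-style chat completion requests; the model name `local-model` and the system prompt are placeholders, so adjust them to whatever your setup shows.

```python
import json
import urllib.request

# Assumption: address of LM Studio's local server; change it if yours differs.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, base_url=BASE_URL, model="local-model"):
    """Build an OpenAI-style chat completion request for the local server."""
    payload = {
        "model": model,  # placeholder name, not a real model identifier
        "messages": [
            {"role": "system", "content": "You are a helpful coding assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    # OpenAI-style responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, calling `ask("Write a Python function that reverses a string.")` should return the model's answer as a string. Nothing here is specific to one model, which is the point: anything that speaks the OpenAI request format can be aimed at the local address instead.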

Caveats

You need some heavy metal to run this locally. Any modern computer should work, but for this method to shine, you're talking about an M2/M3 Max or Ultra with higher specs, or a Windows PC with a modern CPU, lots of RAM, and an RTX 30/40 series GPU (with lots of VRAM). I'm running a fully loaded M2 Max MacBook Pro, which lets me safely use over 50 GB of RAM (the M2 SoC shares memory between the CPU and GPU) plus Metal support, and it produces some nice results. Thankfully, many RAM/VRAM-friendly options exist, even with some of the latest models. LM Studio does a nice job of estimating what your rig can run as you browse and download models. Generally, the larger the model file and its resource requirements, the higher the output fidelity. I wouldn't push it to the max, but push it up if you can.
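If you want a rough feel for the numbers before downloading anything, a common back-of-the-envelope estimate is parameters times bits per weight, divided by eight, to get bytes. The sketch below is only that rule of thumb, not how LM Studio computes its estimates; the `overhead` factor is a guess to leave room for context and runtime buffers.

```python
def estimate_weights_gb(params_billions, bits_per_weight):
    """Rough size of the model weights in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def probably_fits(params_billions, bits_per_weight, available_gb, overhead=1.2):
    """Guess whether a model fits in the given memory.

    overhead is an assumed fudge factor, not a measured value.
    """
    needed_gb = estimate_weights_gb(params_billions, bits_per_weight) * overhead
    return needed_gb <= available_gb
```

For example, a 7-billion-parameter model quantized to 4 bits works out to about 3.5 GB of weights, while the same model at 16 bits is about 14 GB, which is why quantized downloads are the RAM/VRAM-friendly ones.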

Recommended models

Using the following models, I've received solid and consistent results with both chat and coding. Your mileage may vary:

Test each model in the AI chat tab in LM Studio and find one that produces code to your liking before moving on to the next step.

Use your model with VS Code

We'll set up VS Code to provide functionality similar to GitHub Copilot.

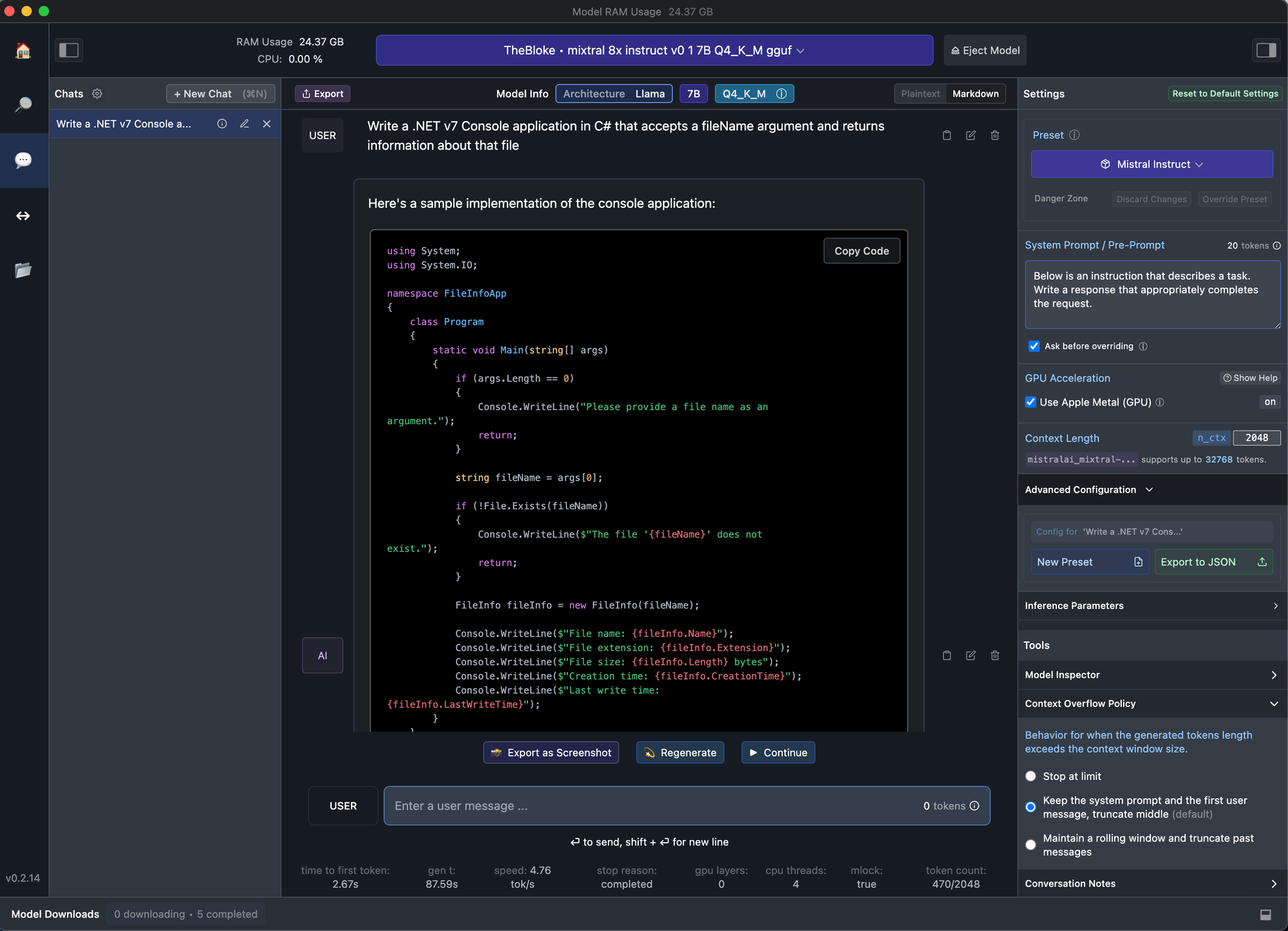

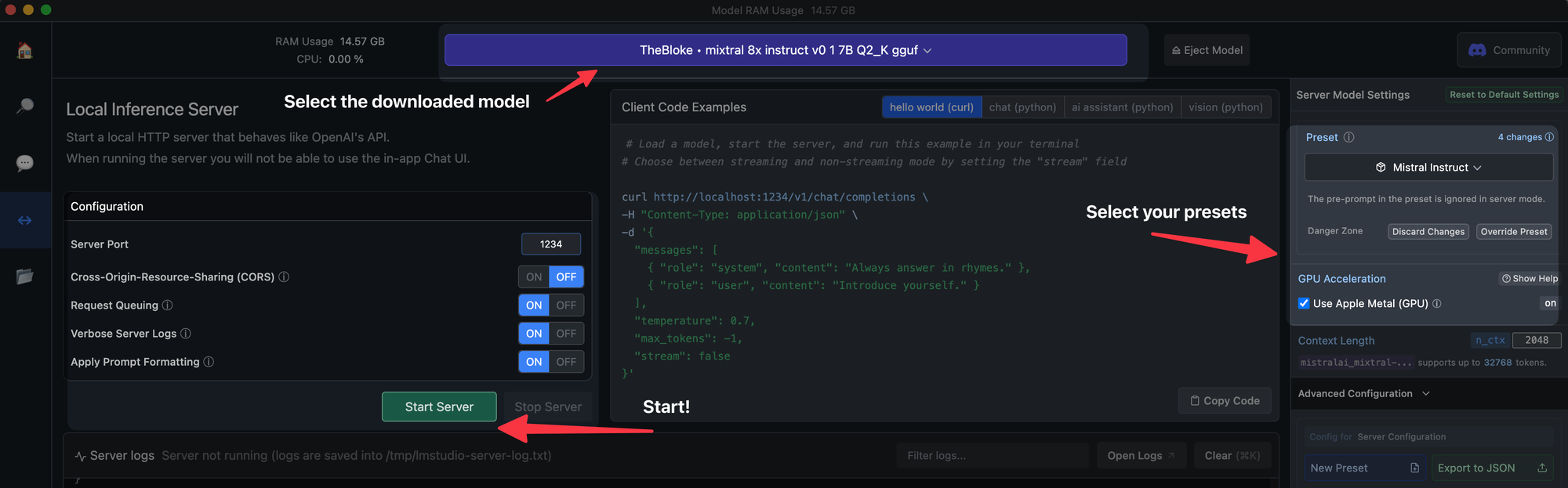

Start the Local server in LM Studio

This is as simple as selecting one of your downloaded models and hitting the Start Server button. The default options should work well, but tweak them to your liking.

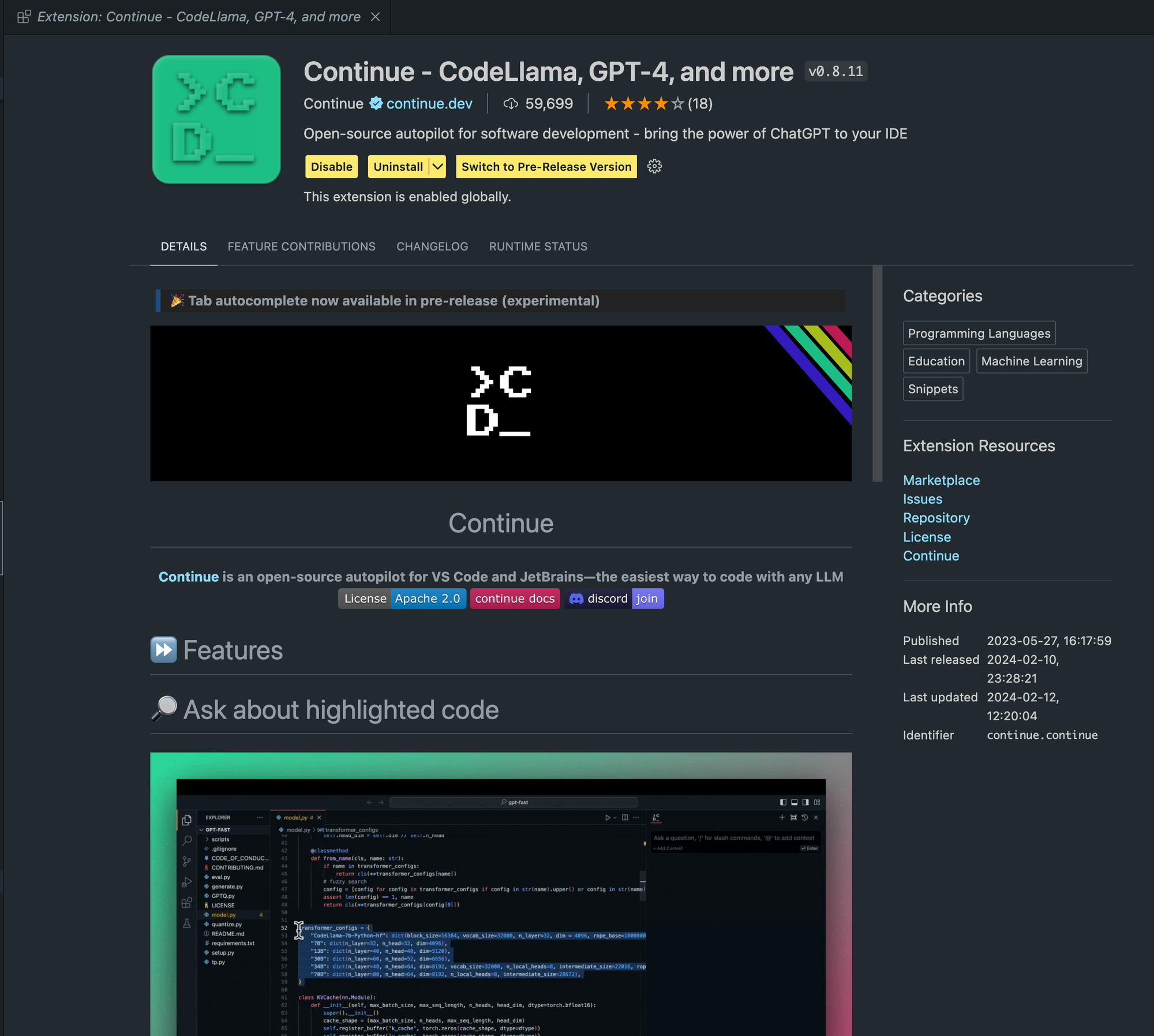

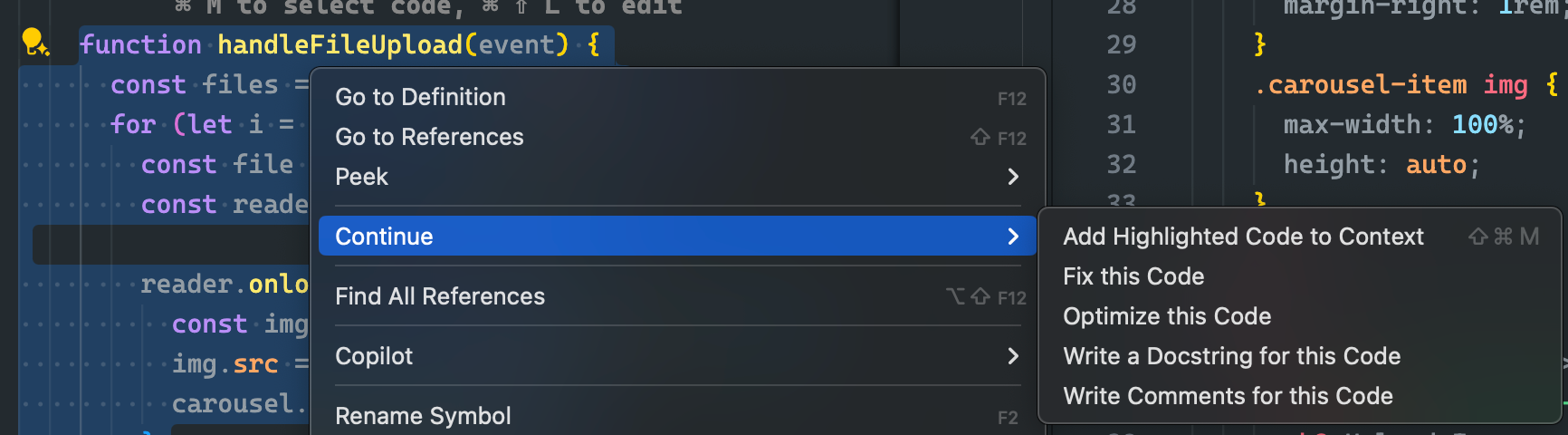

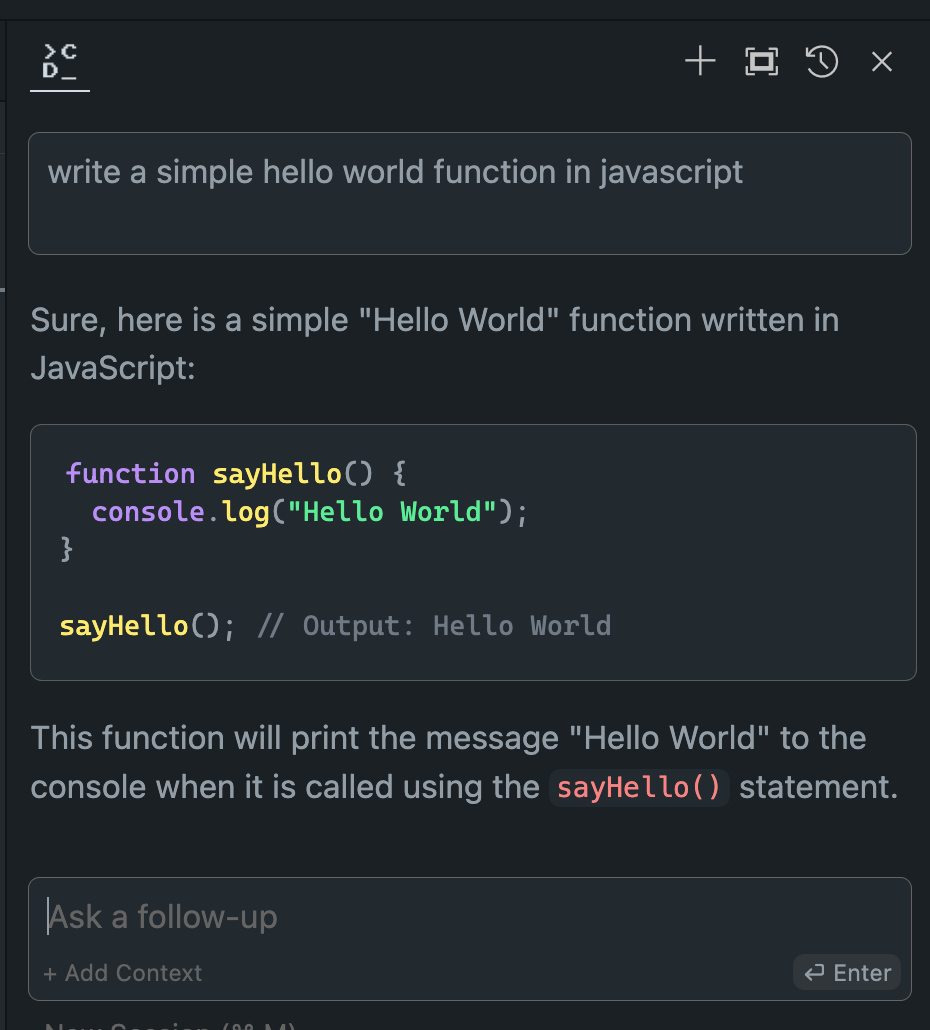
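Before wiring up VS Code, it can help to confirm the server is actually answering. This is a small sketch that asks the server which models it is serving, assuming it exposes an OpenAI-style `/v1/models` endpoint at `http://localhost:1234`; swap in the address shown in your server tab if it differs.

```python
import json
import urllib.request

# Assumption: address of the local server's OpenAI-style model listing.
MODELS_URL = "http://localhost:1234/v1/models"


def model_ids(response_body):
    """Pull the model ids out of an OpenAI-style /models response."""
    return [entry["id"] for entry in response_body.get("data", [])]


def list_local_models(url=MODELS_URL):
    """Return the ids of the models the local server reports."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return model_ids(json.load(resp))
```

If `list_local_models()` raises a connection error, the server most likely isn't started yet or is on a different port.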

Install and configure the Continue extension

Browse the extensions marketplace and install Continue.

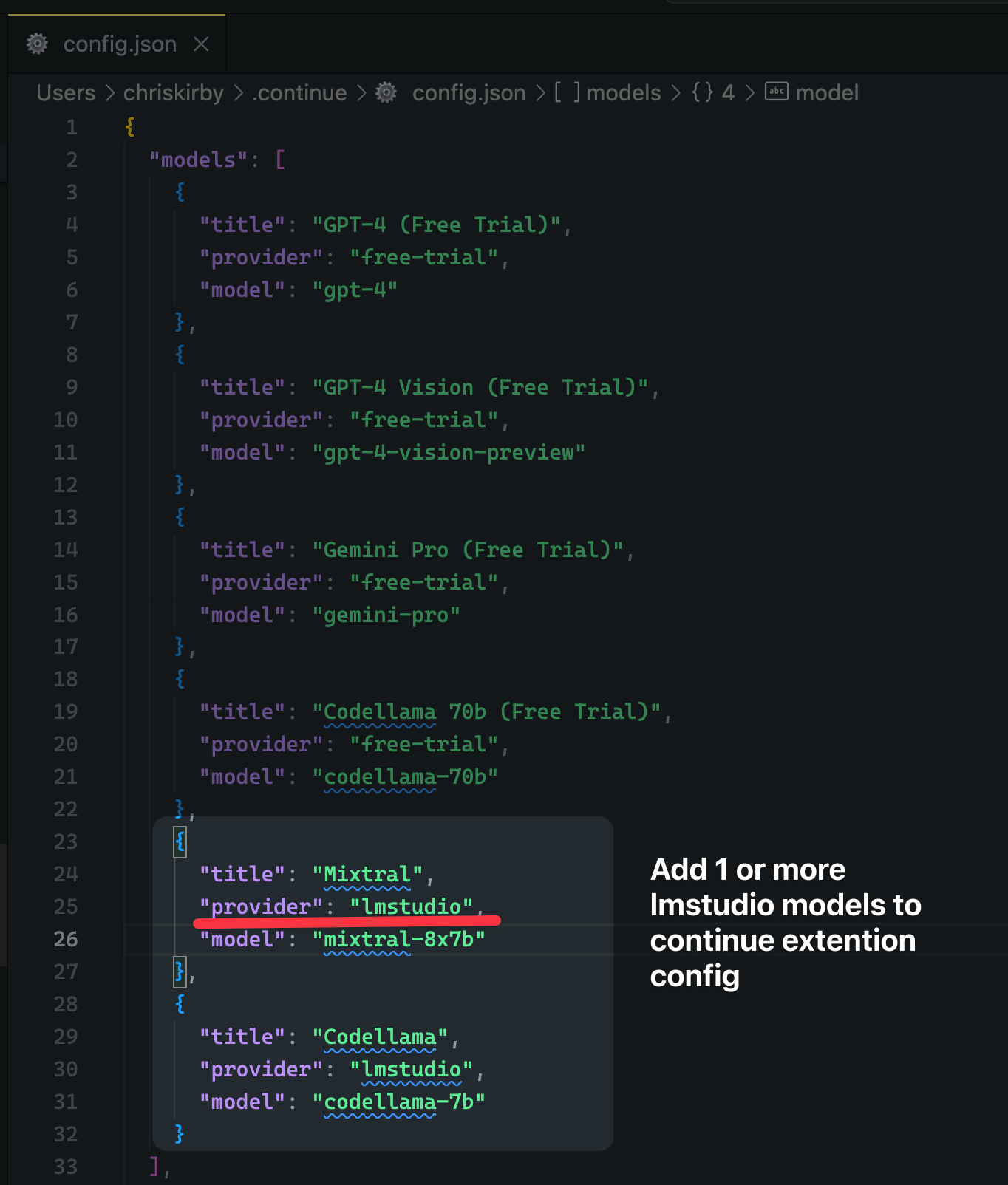

Modify Continue's configuration JSON to add LM Studio support.
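As a rough idea of what that entry might look like, the sketch below builds the JSON in Python and prints it so you can paste it in. It assumes a `config.json` with a `models` list whose entries take `title`, `provider`, and `model` keys, and an `lmstudio` provider value; the model name is a placeholder, and newer Continue releases may use a different configuration format, so check the extension's documentation for your version.

```python
import json


def lmstudio_model_entry(title="LM Studio", model="local-model"):
    """One model entry pointing Continue at LM Studio (assumed schema)."""
    return {
        "title": title,          # label shown in Continue's model picker
        "provider": "lmstudio",  # assumed provider name
        "model": model,          # placeholder; use your loaded model's name
    }


def add_model(config, entry):
    """Return a copy of config with entry appended to its models list."""
    updated = dict(config)
    updated["models"] = list(config.get("models", [])) + [entry]
    return updated


# Print a fragment that can be merged into the existing configuration.
print(json.dumps(add_model({}, lmstudio_model_entry()), indent=2))
```

`add_model` leaves any models you already have configured in place and only appends the new entry.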

Work with your local model inside of VS Code.

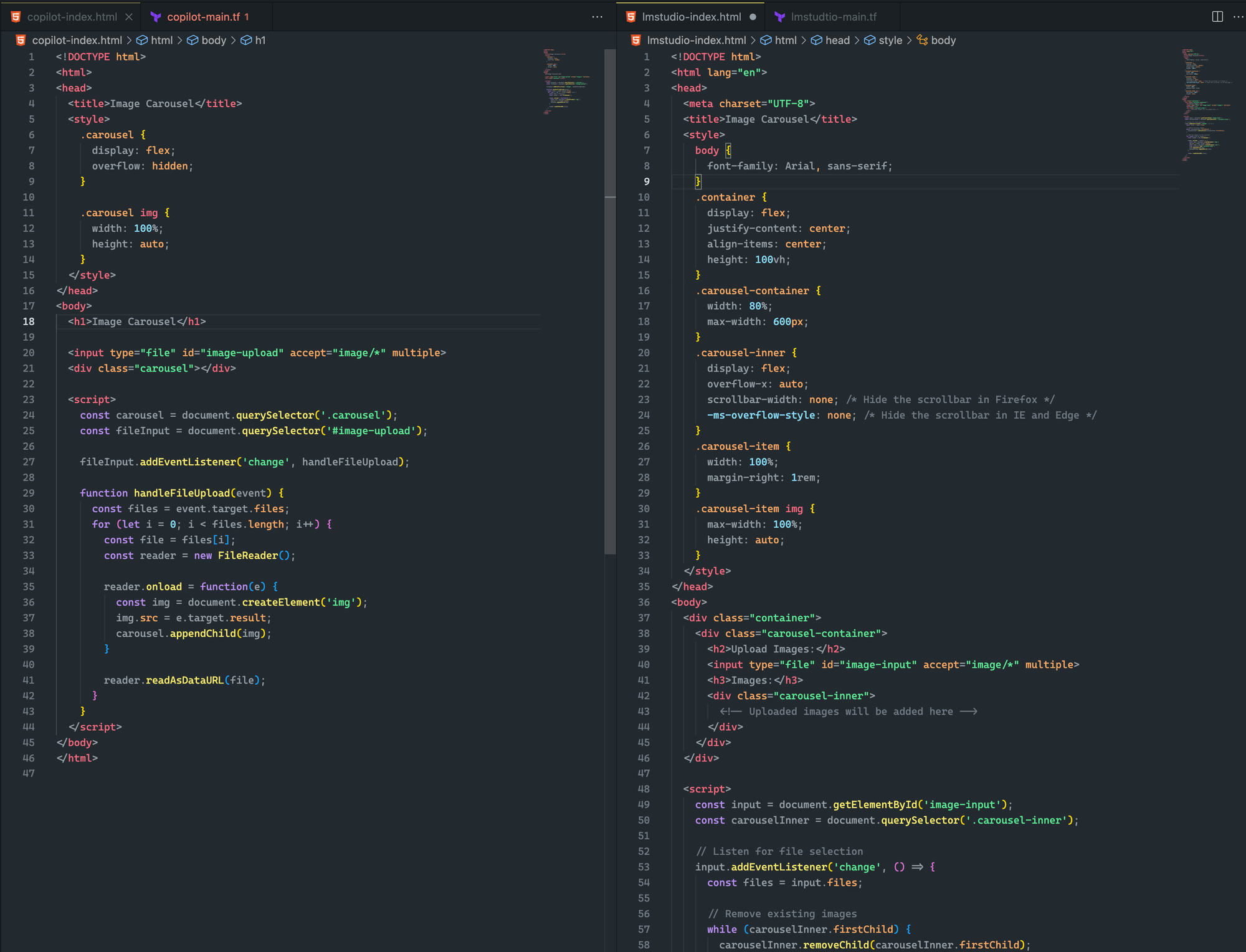

Comparing local models to Copilot

Let's see how it compares! Spoiler: it's pretty comparable, if not slightly better, in some of my testing.

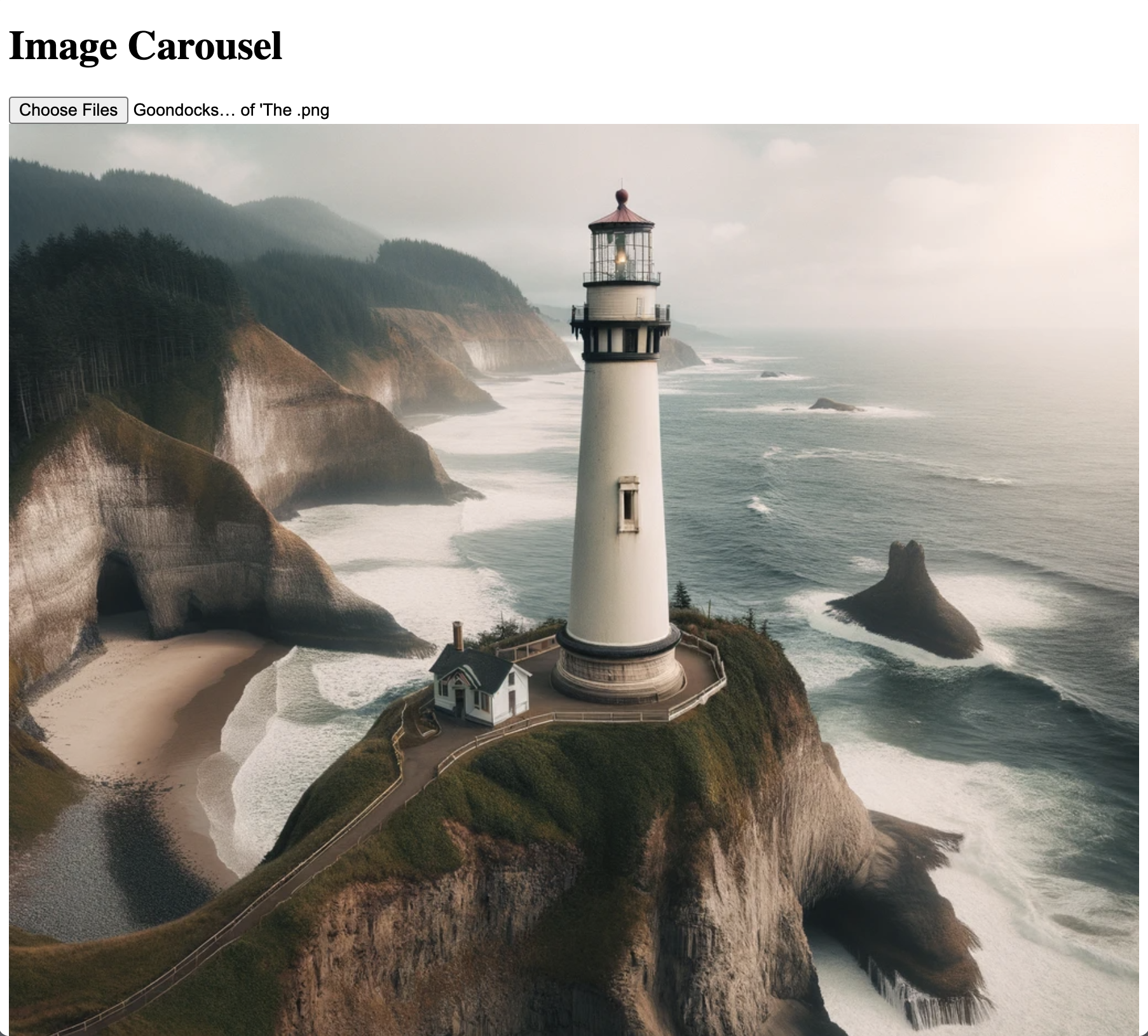

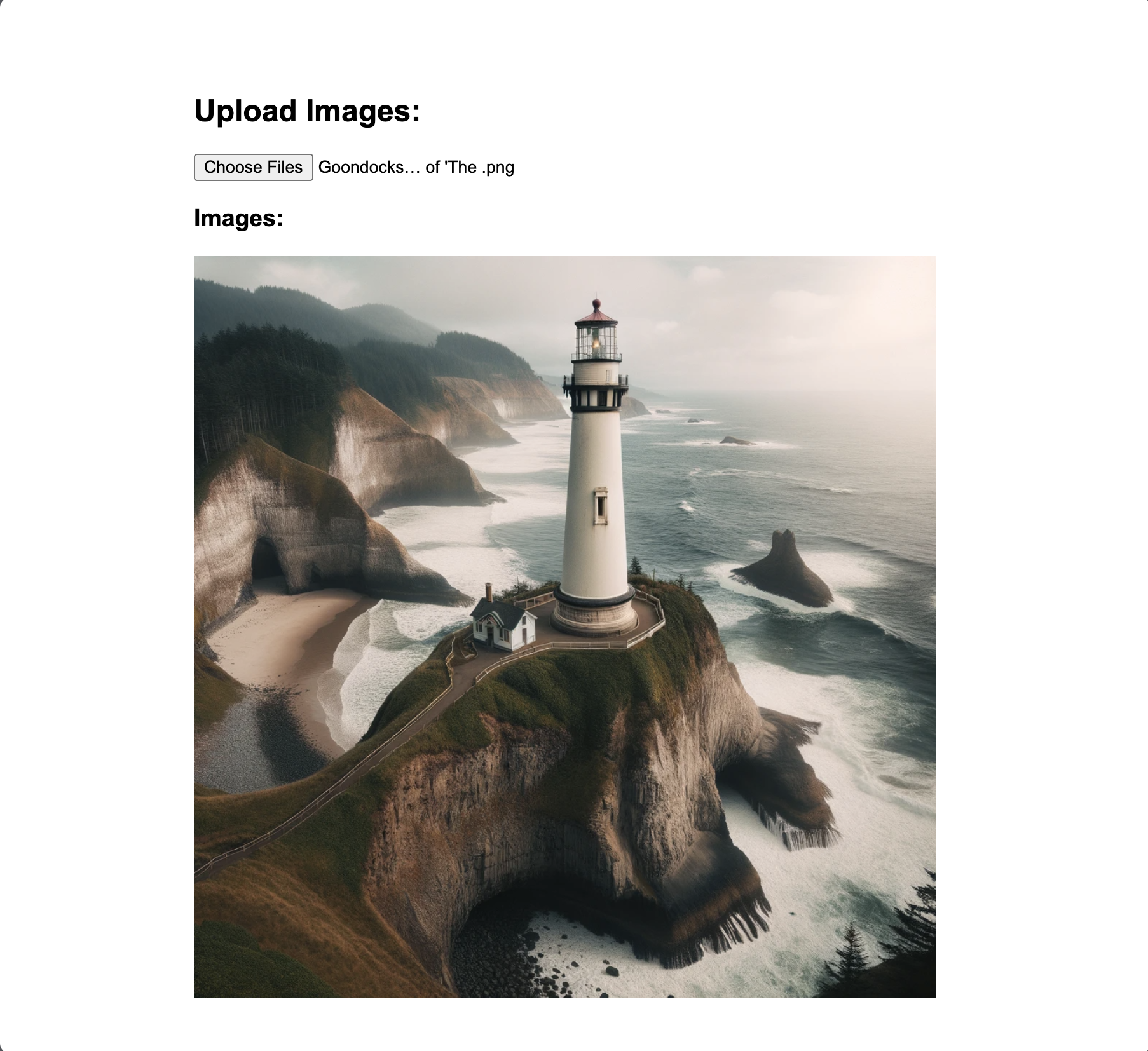

write a single html file that allows you to upload images, then display all of the images in a carousel. use only web technologies that can run in the browser

Pretty close. Neither produced an image carousel, but I could upload an image, and it rendered in both examples. I'm sure with more prompt work, I could get a better result.

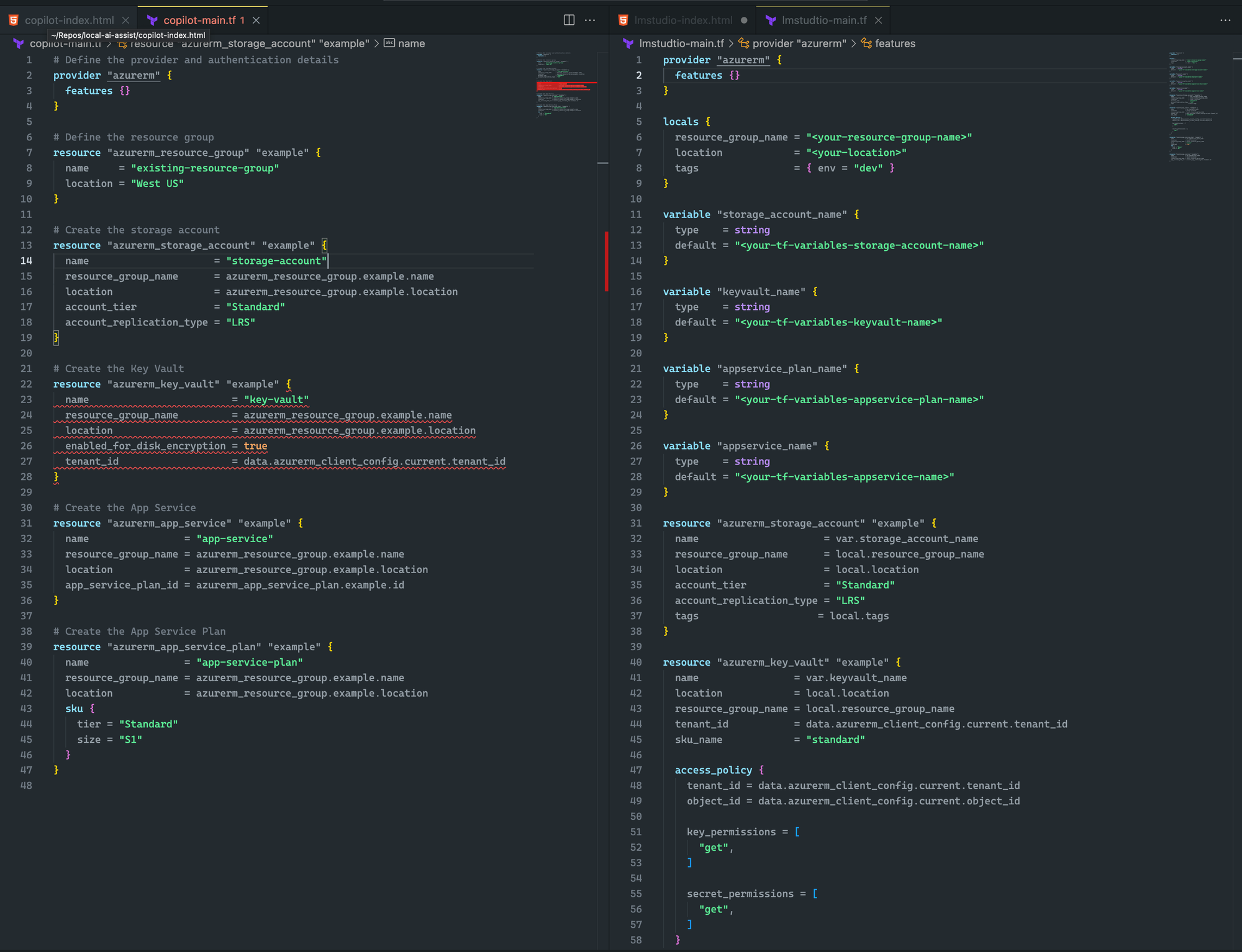

write a single terraform file that creates a new azure storage account, azure key vault, and azure app service, that will reside in an existing resource group

I give a slight edge to LM Studio and Mixtral here, as the Terraform is not only more complete but also free of syntax errors. Granted, Copilot's output has just one small error: the key vault declaration is missing the sku_name, which is easily corrected.

Have fun! 🎉