The Joy and Agony of Building with AI Agents

I'm increasingly leveraging agentic AI tools: tools you can interact with that act on your behalf directly inside your code editor. Let's talk about how to use, and not use, these incredible tools to be more productive and write code you would be proud to ship.

As I work on my Home Lab, various open source projects, and projects for work, I'm increasingly leveraging agentic AI tools: tools you can interact with that act on your behalf directly inside your code editor. I primarily use Cursor and VS Code with GitHub Copilot, both of which are developing interactive agents to improve coding productivity. Claude Code is also impressive and worth a mention. These agents can leverage the latest models from Anthropic and others to write code and execute commands directly in your code editor. In many ways, it's the interface you already know from ChatGPT or Claude, but now with the ability to automatically gather context from your codebase, create new files, run unit tests, and do almost anything you can do manually in your editor or via the CLI. This is incredibly powerful, amazing even... at first.

Now, you can certainly "vibe" your way to something, but because we are using natural language, with all of the limitations and unpredictability of LLMs, you must know what questions to ask, challenge assumptions, and define consistent context and rules to achieve better, more consistent results. In the hands of a skilled operator (or an aspiring one, in my case), you can genuinely create code you would feel proud to ship 🐿️. I'm still learning daily, but I wanted to stop and share some of what I've learned thus far in the hope that it may also help you on your journey to developing this vital new skill.

Set the ground rules

With all LLMs, your outcomes are only as good as the context you provide and the accompanying prompts or instructions. To achieve better consistency, especially with coding, it's essential to define a set of rules that accompany all of your requests. This is a simple Markdown or text file that you either add manually or configure to be included automatically with every chat request. It establishes the boundaries and the type of code you would like the agent to produce. Cursor offers a first-class feature called Rules to formalize this, including ways to automatically include one or more rule files in your chat session. Copilot offers some conventions for defining prompt files as well, which can be manually included in your chat session. Simply put, LLMs have vast general knowledge but no long-term memory and minimal short-term memory. Think of 10-second Tom from the movie 50 First Dates: every chat is a new introduction. "Hi, I'm Chris." The tools understand this and are getting better at solving the problem every day, but for now, this step is essential to achieving better results. Here are some of my general guidelines, along with some examples:

- Clearly define coding styles and patterns.

  "I prefer idiomatic C# using the latest language features", "Keep the code DRY"

- Describe the purpose of your project.

  "To make an awesome Home Assistant custom integration"

- Provide repeatable instructions.

  "Keep a rolling log of each change you make in a file called agent-changelog.md"

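Conceptually, "including rules automatically" means the same text is sent again at the start of every new conversation, because the model remembers nothing from the previous one. The Python sketch below illustrates that idea under stated assumptions: the file name `agent-rules.md`, the helpers `load_rules` and `build_messages`, and the role/content message format are all hypothetical, and this is not how Cursor or Copilot actually implement rules.

```python
import tempfile
from pathlib import Path


def load_rules(paths: list[Path]) -> str:
    """Concatenate one or more rule files into a single block of text."""
    return "\n\n".join(p.read_text(encoding="utf-8").strip() for p in paths)


def build_messages(rules: str, user_prompt: str) -> list[dict[str, str]]:
    """Build a brand-new conversation for each request.

    The model keeps no memory between chats, so the rules are sent
    again, first, every single time.
    """
    return [
        {"role": "system", "content": rules},
        {"role": "user", "content": user_prompt},
    ]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Hypothetical rules file whose lines echo the guidelines above.
        rules_file = Path(tmp) / "agent-rules.md"
        rules_file.write_text(
            "- Use idiomatic C# and the latest language features.\n"
            "- Keep the code DRY.\n"
            "- Keep a rolling log of each change in agent-changelog.md.\n",
            encoding="utf-8",
        )
        rules = load_rules([rules_file])

    # Two separate chats: each one starts with the same rules.
    for prompt in ["Add a retry to the API client.", "Fix the failing unit test."]:
        for message in build_messages(rules, prompt):
            print(f"[{message['role']}] {message['content']}")
        print()
```

Each call to `build_messages` starts from scratch, which is why the rules file has to ride along with every request.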
Here is an example of a complete global rules file I use when working on my UniFi Network Rules custom integration for Home Assistant:

There is no substitute for Wisdom, yet

Like very smart and helpful people, or perhaps your favorite medical practitioner, agents often focus on treating the symptom rather than the root cause. This is by far the most common issue I encounter, and it's where your experience will be leveraged the most. Perhaps you are diagnosing a bug, providing the necessary context, logs, and screenshots, and doing all of the right things. Even so, you must stay diligent. Review the code and ensure the implementation is thorough. Challenge it: "Why are you removing x?", "What about y?" Force it to follow the rules and write better, more thoughtful code. Your best chance of doing that is while the context is still fresh. I reject nearly as many changes as I accept, and you should be prepared to do the same. It's best to be clear about what you want from the start.

Avoid something like

"help me debug this. here is the log:"

Prefer something like

"I see we have a problem with x. Let's try to address this in a targeted way, as all other functionality is working correctly. Let's also ensure that any changes we make are consistent with established rules and patterns. Here is the debug log to help us get started. Analyze and provide recommendations."

The more instructions and intentions you provide, the better and more consistent the outcome.

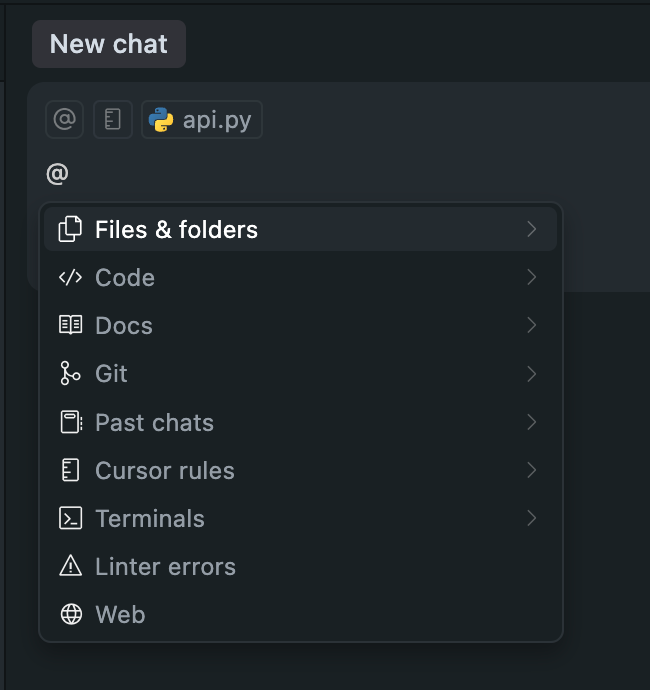

Context is King—Give It Everything, Assume Nothing

Just as rules and prompt files establish general context, it's vital that you consistently provide the relevant context for each task to get thorough results from the agent. Well-written code is complex: it uses abstractions, libraries, APIs, and unit tests, and a single feature will likely span dozens of files. Unless you provide those files, assume the agent has no clue about them. Thankfully, VS Code and Cursor make this incredibly easy and are actively improving the tooling. Within the agent chat window on the right, you can add files with ➕ and use @ or # syntax to include entire folders, individual files, previous chats, and even websites. Do it every time. Missing key context will almost certainly lead to incorrect, incomplete, or duplicate code.

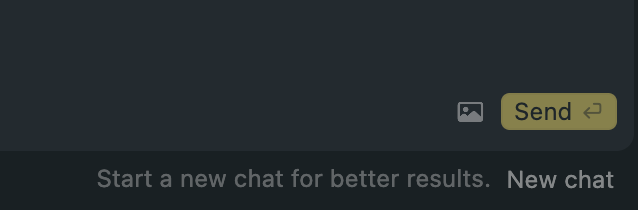
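To make "give it everything" concrete, here is a rough Python sketch of what attaching a folder or a set of files roughly amounts to: the file contents, each labeled with its path, get pasted into the prompt. `gather_context` and the `--- path ---` header format are hypothetical; this is not how VS Code or Cursor actually resolve `@` or `#` mentions.

```python
import tempfile
from pathlib import Path


def gather_context(root: Path, patterns: list[str]) -> str:
    """Concatenate every file matching `patterns` under `root`.

    Each file is labeled with its relative path so the model can tell
    files apart; files matched by more than one pattern appear once.
    """
    sections: list[str] = []
    seen: set[Path] = set()
    for pattern in patterns:
        for path in sorted(root.glob(pattern)):
            if not path.is_file() or path in seen:
                continue
            seen.add(path)
            text = path.read_text(encoding="utf-8", errors="replace")
            sections.append(f"--- {path.relative_to(root).as_posix()} ---\n{text}")
    return "\n\n".join(sections)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        # A hypothetical feature that spans source, tests, and docs.
        (root / "src").mkdir()
        (root / "tests").mkdir()
        (root / "src" / "api.py").write_text("def get():\n    ...\n", encoding="utf-8")
        (root / "tests" / "test_api.py").write_text("def test_get():\n    ...\n", encoding="utf-8")
        (root / "README.md").write_text("# Demo\n", encoding="utf-8")

        context = gather_context(root, ["src/**/*.py", "tests/*.py", "*.md"])
        print("Add retries to get().\n\n" + context)
```

If a file the feature depends on isn't matched by one of the patterns, the model never sees it, which is how missing context turns into duplicate or incorrect code.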

Keep your agent sessions small

As critical as context is, it's also limited. You'll often see diminishing returns the longer you chat, so keep each session small and focused. Once you achieve, or even get close to, the outcome you want, stop there and start a new chat. Cursor has a nice feature that summarizes your current session and adds that summary as context for the new chat. This keeps your agent sessions productive, like a good commit or an easy-to-review pull request. This can be a fine line, so use your best judgment. The general rule I follow is big context, targeted ask; avoid small context with a vague ask.
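The sketch below models why long sessions degrade and what a summarize-and-restart handoff does. It assumes a very rough rule of thumb of about four characters per token and an arbitrary 80% threshold; `ChatSession`, `estimate_tokens`, and `handoff` are hypothetical names, and in practice the summary would be written by the tool or by you.

```python
from dataclasses import dataclass, field


def estimate_tokens(text: str) -> int:
    """Rough heuristic only: about 4 characters per token, rounded up."""
    return (len(text) + 3) // 4


@dataclass
class ChatSession:
    budget_tokens: int  # hypothetical context window size
    history: list[str] = field(default_factory=list)

    def add(self, message: str) -> None:
        self.history.append(message)

    def used_tokens(self) -> int:
        # In this model the whole history is sent with each new prompt,
        # so every message so far counts against the budget.
        return sum(estimate_tokens(m) for m in self.history)

    def should_start_new_chat(self, threshold: float = 0.8) -> bool:
        """Arbitrary rule: wrap up once 80% of the budget is used."""
        return self.used_tokens() >= threshold * self.budget_tokens

    def handoff(self, summary: str) -> "ChatSession":
        """Start a fresh session seeded only with a short summary."""
        return ChatSession(self.budget_tokens, [summary])


if __name__ == "__main__":
    session = ChatSession(budget_tokens=1_000)
    for turn in range(1, 100):
        session.add(f"Turn {turn}: " + "log output and code " * 10)
        if session.should_start_new_chat():
            print(f"Budget nearly used after {turn} turns; starting a new chat.")
            session = session.handoff("Summary: retry logic added, tests passing.")
            break
    print(session.history)
```

The new session starts nearly empty, which mirrors the advice above: carry forward what you learned, not every step it took to learn it.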

Know when to fold 'em

Agents will take you down a rabbit hole. I've spent hours in a single session just trying to get there. What seems like a simple ask can sometimes take 25, 50, or more steps as the agent "thinks" its way through each prompt. Cursor knows this and stops the agent after 25 steps per prompt, asking whether you would like it to continue. By the end, perhaps you can't keep any of it. Stash it and start over. Sometimes too much has changed: you've put existing functionality at risk, or too many tests are broken. I understand the desire to roll forward because some of the changes may be worth keeping. The good news is that YOUR short-term memory is outstanding, so take everything you have learned and hit that plus sign to start a new chat. It will be better this time, and you will keep things small. At the first sign of spiraling, stop the agent, revert, then go back to the previous prompt and change it. The tools manage state for each session, so use that: both Cursor and VS Code keep a chat history that records each step. This has saved me a lot of time and will save time for you too.
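Here is a minimal Python sketch of the two safeguards described above: an agent loop that pauses every `max_steps` steps (25 by default, mirroring the pause described for Cursor) and a checkpoint after every step, so you can revert instead of rolling forward. The `step` function, the `State` type, and `run_agent` are hypothetical stand-ins, not any tool's real API.

```python
from typing import Callable, Optional

State = dict[str, str]  # hypothetical: file path -> file contents


def run_agent(
    step: Callable[[State], Optional[State]],
    state: State,
    max_steps: int = 25,
    ask_to_continue: Callable[[int], bool] = lambda steps_taken: False,
) -> tuple[State, list[State]]:
    """Apply `step` until it returns None, pausing every `max_steps` steps.

    Returns the final state plus a checkpoint taken before the first step
    and after every step, so checkpoints[k] is the state after k steps.
    """
    checkpoints = [dict(state)]
    steps_taken = 0
    while True:
        # Pause for a human decision at every multiple of max_steps.
        if steps_taken and steps_taken % max_steps == 0:
            if not ask_to_continue(steps_taken):
                break
        new_state = step(state)
        if new_state is None:  # the agent reports that it is done
            break
        state = new_state
        checkpoints.append(dict(state))
        steps_taken += 1
    return state, checkpoints


if __name__ == "__main__":
    def noisy_step(state: State) -> Optional[State]:
        """Hypothetical agent step that keeps piling edits onto one file."""
        edits = int(state["edits"])
        if edits >= 40:
            return None
        return {"edits": str(edits + 1), "main.py": state["main.py"] + f"# edit {edits + 1}\n"}

    final, history = run_agent(noisy_step, {"edits": "0", "main.py": ""})
    print("Stopped after", final["edits"], "edits")
    # Spiraling? Revert to an earlier checkpoint instead of rolling forward.
    reverted = history[5]
    print("Reverted to", reverted["edits"], "edits")
```

The default `ask_to_continue` declines, so the loop stops at the first pause; that conservative default reflects the "know when to fold 'em" advice rather than any tool's actual behavior.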

Simple is Hard, Hard is Simple

File > New Project: "Create me a new console app with an API endpoint that will summarize today's stock market data." No problem! "Add a new API endpoint to x": whole project destroyed! Boy, can AI agents overcomplicate the most straightforward thing! You've told it not to and given it instructions, but there it goes again. For lack of a better term, it lacks elegance; simple, clean code seems hard for it. You can get there, but you have to be very clear and hold it accountable to the rules. I hit the stop button the second it starts to deviate or overcomplicate. Sometimes I'll go back and update my rules, too, then start again. In times like these, I find it faster to just get into the editor myself and make the changes using inline code completion. Agents aren't the tool for every problem; they can slow you down, too. You know how to write clean code, so get in there and show the agent how it's done!

REVIEW, REVIEW, and REVIEW AGAIN

I treat AI-generated changes like a junior dev's pull request. The changes are just a starting point and can often be implemented more thoughtfully. You are the senior engineer, the gatekeeper, if you will. You have the wisdom, and this is where you should apply it. Don't accept the changes wholesale. Look at each file and each diff; it's worth it. Reinforce your design principles, be additive, and you will get a better result than you could have achieved alone.
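Reviewing file by file can be sketched with Python's standard `difflib` module: show a unified diff for each changed file and keep only the changes you approve. The `review` and `file_diff` helpers and the in-memory `{path: contents}` representation are assumptions for illustration; in a real editor you would use its built-in diff view.

```python
import difflib
from typing import Callable

Files = dict[str, str]  # hypothetical: path -> contents


def file_diff(path: str, old: str, new: str) -> str:
    """Unified diff for one file, like the editor's per-file diff view."""
    return "".join(
        difflib.unified_diff(
            old.splitlines(keepends=True),
            new.splitlines(keepends=True),
            fromfile=f"a/{path}",
            tofile=f"b/{path}",
        )
    )


def review(before: Files, after: Files, accept: Callable[[str, str], bool]) -> Files:
    """Walk every changed file and apply only the changes you approve."""
    result = dict(before)
    for path in sorted(before.keys() | after.keys()):
        old, new = before.get(path), after.get(path)
        if old == new:
            continue  # unchanged, nothing to review
        if accept(path, file_diff(path, old or "", new or "")):
            if new is None:
                result.pop(path, None)  # approved deletion
            else:
                result[path] = new
    return result


if __name__ == "__main__":
    before = {"api.py": "def get():\n    return fetch()\n"}
    after = {
        "api.py": "def get():\n    return fetch(retries=3)\n",
        "helpers.py": "def fetch_again():\n    ...\n",  # unrequested new file
    }

    def accept(path: str, diff: str) -> bool:
        print(diff)
        return path == "api.py"  # reject the unrequested helper

    print(review(before, after, accept))
```

Rejected files simply keep their original contents, so a partial review never leaves you worse off than where you started.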

Final thoughts

Coding is complex, so having these incredible tools by your side is truly a shift in how software will be crafted going forward. AI will touch every pull request, bug fix, and product release, and I say that optimistically. The more we understand and master our tools, the more prepared and productive we will be. With that said, things are moving quickly... dare I say too quickly. "Trust, but verify" has never been more apt than it is today. If you are a software engineer, engineer-adjacent, or a maker, this is the present and most certainly the future. I'd love to hear how your experience working with these tools has been. Have you tried them? Have you had similar experiences or learnings? Let me know, and have fun!