Working with the Azure Storage REST API in automated testing

Testing! Every developer's favorite topic :). For me, if I can save time through automation, I'm interested. Automated testing for a developer typically starts with unit tests; even if you don't subscribe to TDD, you've probably written at least one to see what all the fuss was about. Like me, I'm sure you saw that testing complex logic at build time has considerable advantages for quality and for the freedom to take risks. However, even with the most comprehensive tests at 100% coverage, you still have more work to do on your journey toward a bug-free existence.

Given that most modern applications rely on a wide variety of cloud platform services, testing can't stop with the fakes and mocks...good integration testing is where it's at to get you the rest of the way. Integration testing is nothing new; it's just more complicated today than it was even a few years ago. A tester's job is to test your application's custom interface, its data, and its interaction and integration with dozens of third-party services and SaaS providers. Thankfully, we've agreed on a common language...where there is a service, there is a REST API. This post demonstrates how to work with Azure Storage, free of the SDK, in a test environment like Runscope or Postman.

Authorization

The worst part of working with this particular API is getting through the 403. The docs are comprehensive regarding what you have to do, but they are very light on how to do it. With that said, there are two primary ways to accomplish this: you can build a custom Authorization header, or you can generate a Shared Access Signature (SAS) and pass it via the query string. I cover both approaches below, but I highly recommend using SAS for simplicity.

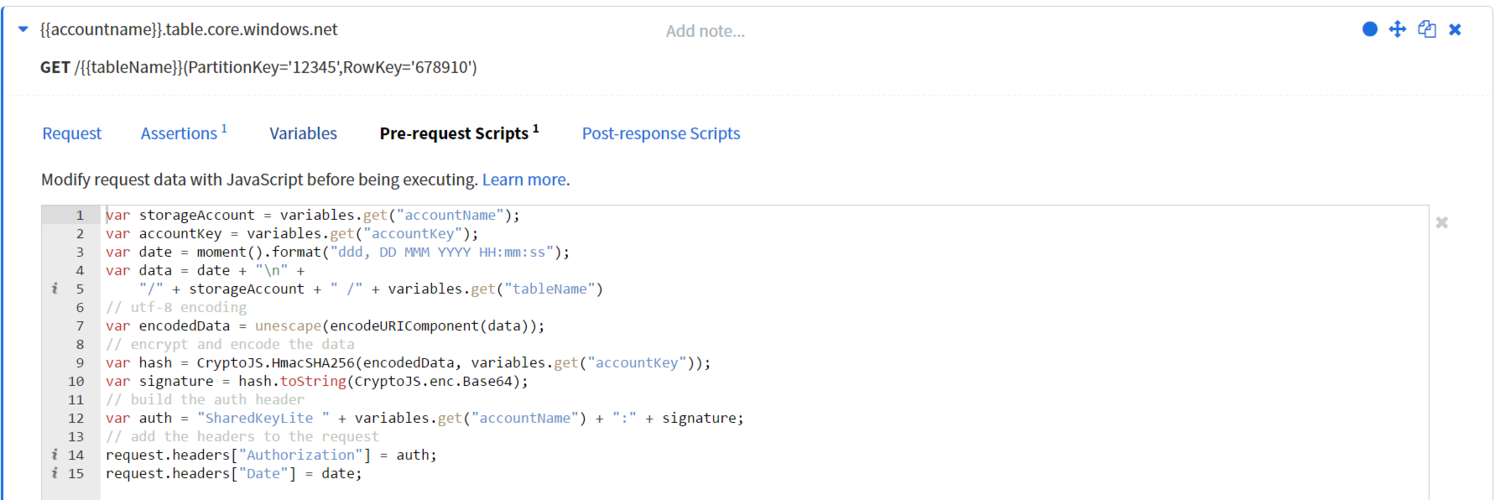

Generating and using a Shared Key Authorization header

The short of it is that you piece together a custom signature string, sign it with the HMAC-SHA256 algorithm using your primary or secondary storage account key (Base64-decoded first, since the key is handed to you as a Base64 string), and Base64-encode the result. If this sounds complicated, it is. Here is the full dump on SharedKey authorization from the Azure docs. The following is an example generation script and how you could use it in Runscope, my favorite tool for testing APIs.

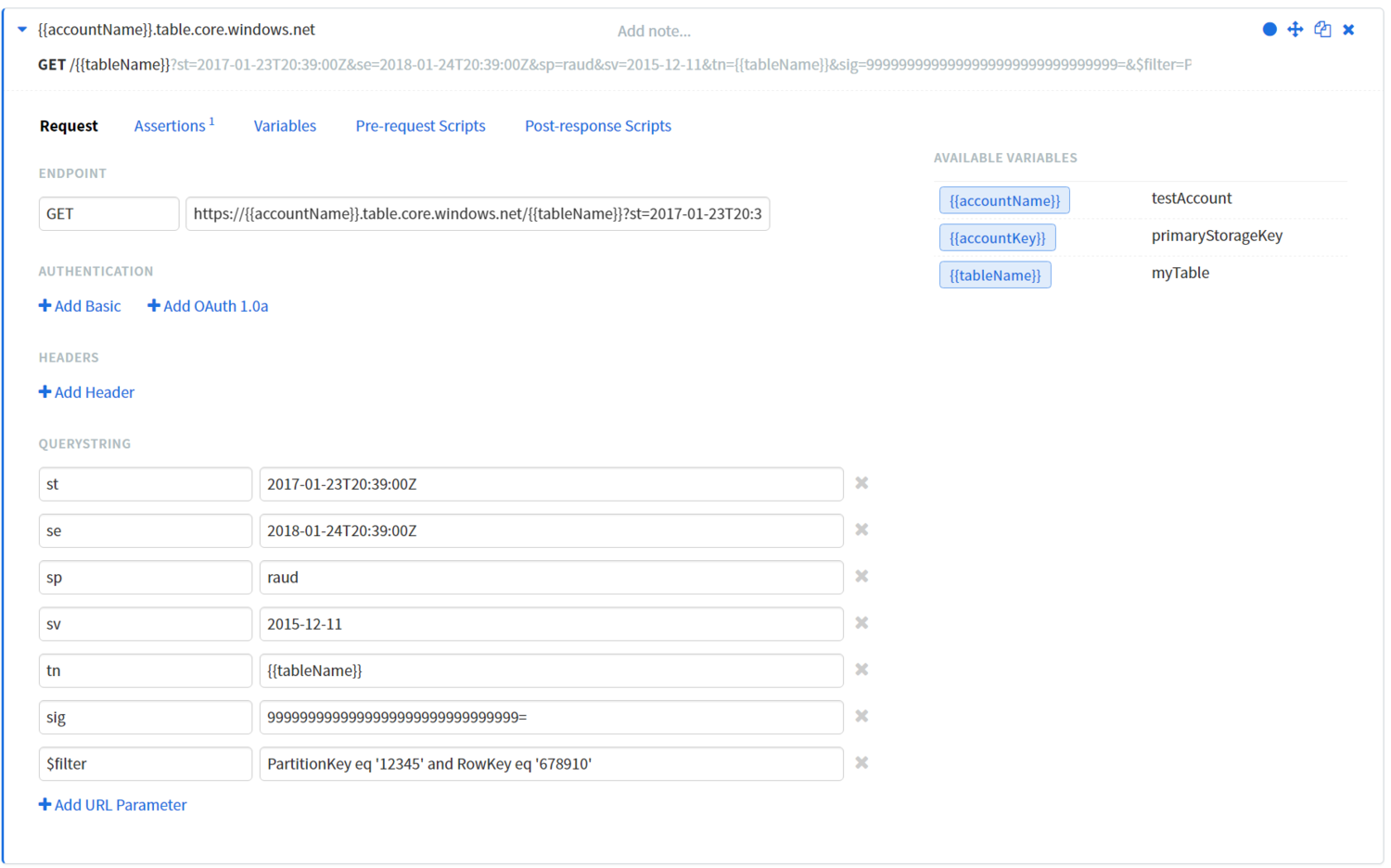
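For readers who want the same steps outside of Runscope, here is a minimal Python sketch of the signing flow described above. It assumes the Table service flavor of Shared Key, where the string to sign is the verb, Content-MD5, Content-Type, date, and canonicalized resource joined by newlines; Blob, Queue, and File requests use a longer string to sign, so check the docs before reusing this elsewhere. The function name `table_shared_key_header`, the account name, and the key are placeholders of mine, and the sketch ignores query parameters in the canonicalized resource.

```python
import base64
import hashlib
import hmac
from email.utils import formatdate


def table_shared_key_header(account, key_b64, verb, resource_path, date,
                            content_md5="", content_type=""):
    """Build a Shared Key Authorization header value (Table service layout assumed)."""
    # Canonicalized resource: "/<account>/<path>". Query parameters are not handled here.
    canonicalized_resource = f"/{account}/{resource_path.lstrip('/')}"
    # Assumed Table service string-to-sign: five fields separated by newlines.
    string_to_sign = "\n".join(
        [verb.upper(), content_md5, content_type, date, canonicalized_resource]
    )
    # The account key is a Base64 string; decode it before using it as the HMAC key.
    key = base64.b64decode(key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return f"SharedKey {account}:{signature}"


if __name__ == "__main__":
    # Placeholder account and key, for illustration only.
    fake_key = base64.b64encode(b"not-a-real-key").decode("ascii")
    date = formatdate(usegmt=True)  # RFC 1123 date, sent as the x-ms-date header
    header = table_shared_key_header("myaccount", fake_key, "GET", "/mytable()", date)
    print("x-ms-date:", date)
    print("Authorization:", header)
```

The date you sign has to be the exact value you send in the `x-ms-date` (or `Date`) header, which is usually where the 403 comes from when this goes wrong.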

Generating and using a SAS

Did I mention this was easier? There are several common ways to generate one without writing code against the SDK. The most obvious is the Azure Portal: navigate to your storage account blade and look for the Shared access signature option on the left menu. The other option is the excellent Azure Storage Explorer tool. Once authenticated, drill down to the account or resource you want to access and use the context menu to generate the signature. If you want tight control over security, I suggest using Storage Explorer because it has an interface for generating signatures on specific tables, containers, and queues. The Portal, on the other hand, only has an interface for the account-level signature (at the time of writing). Now that I have my SAS, I pass it on the query string of the request URL in Runscope.
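Passing the SAS via query string amounts to appending the token to the resource URL. A small Python sketch of that step follows; the helper name `append_sas`, the account URL, and the token value are made up for illustration. The token is appended as-is because the portal and Storage Explorer already hand it to you percent-encoded, and re-encoding it would break the signature.

```python
from urllib.parse import urlsplit, urlunsplit


def append_sas(resource_url, sas_token):
    """Return resource_url with the SAS token added to its query string."""
    # Tools often hand out the token with a leading "?"; drop it if present.
    token = sas_token.lstrip("?")
    parts = urlsplit(resource_url)
    # Keep any existing query options (for example $top or $filter) ahead of the token.
    query = f"{parts.query}&{token}" if parts.query else token
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, parts.fragment))


if __name__ == "__main__":
    # Hypothetical account, table, and token values.
    url = append_sas(
        "https://myaccount.table.core.windows.net/mytable()",
        "?sv=2019-02-02&sig=abc%3D",
    )
    print(url)
```

With this approach there is no Authorization header to compute at all, which is why I find it the easier option in a test tool.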

Wrap up

Now that you're getting a 200, you can move on to writing your assertions. By default, the OData response you'll get back is in the Atom XML format, which makes writing your JavaScript assertions more difficult. To get the result in JSON, be sure to add an Accept request header with the value application/json;odata=fullmetadata. Happy testing!
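To show why the JSON format is easier to assert against, here is a short Python sketch that sets the Accept header mentioned above and pulls the keys out of a response body. The `x-ms-version` value is an assumption on my part (any version that supports JSON payloads should do), and `SAMPLE_BODY` is a hand-written stand-in for a table query result, not output captured from a real account. The helper names are also mine.

```python
import json

# Hand-written example payload; a real response will carry more metadata fields.
SAMPLE_BODY = json.dumps({
    "odata.metadata": "https://myaccount.table.core.windows.net/$metadata#mytable",
    "value": [
        {"PartitionKey": "p1", "RowKey": "r1", "Name": "first"},
        {"PartitionKey": "p1", "RowKey": "r2", "Name": "second"},
    ],
})


def json_request_headers(version="2019-02-02"):
    """Headers asking for JSON instead of the default Atom XML."""
    return {
        "Accept": "application/json;odata=fullmetadata",
        "x-ms-version": version,  # assumed version, adjust to your account
    }


def entity_keys(body):
    """Return (PartitionKey, RowKey) pairs from a JSON query response body."""
    payload = json.loads(body)
    return [(e["PartitionKey"], e["RowKey"]) for e in payload.get("value", [])]


if __name__ == "__main__":
    print(json_request_headers())
    print(entity_keys(SAMPLE_BODY))
```

The same idea carries over to a JavaScript assertion in Runscope or Postman: parse the body once, then check fields on plain objects instead of walking an XML tree.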